The beginner’s guide to AI model architectures

Unlike an onion, hopefully these neural network layers won't make you cry.

Let’s give a warm welcome to Nicole, our newest correspondent at Technically. Nicole studied Computer Science at Duke with a concentration in AI, led cutting-edge research at a robotics lab on campus, and will be writing deep dives on how AI works.

- Justin

TL;DR

Architectures are the blueprints for AI models: they dictate how models are designed and built

Most AI today is made up of computing units called neurons linked together in complex networks

There are a million ways to build these networks: different algorithms, structures, and sizes

Researchers match different architectures to the specific problems and data constraints they face

Have you ever wondered how AI models get designed? Or what makes a Large Language Model like the one behind ChatGPT different from a Computer Vision model used for self-driving cars? Isn’t it all just AI under the hood?

The answer boils down to model architecture. Architectures are the blueprints of AI models: they are the sum of all the decisions the model’s builders make about which algorithms, data, sizes, and other stuff go into it. There are tons and tons of ways to build an AI model; a particular architecture just chooses one (or multiple, but more on that later).

Picking the right architecture for your domain is really important. The basic 101 tagline for how AI models get built goes something like, “AI uses really complicated math to learn patterns from really large quantities of data.” This explanation isn’t wrong, but it’s only half the story. The other half? Smart architecture design.

To understand model architectures and how they work, we have to start with the neuron – the building block of every advanced AI model out there today. This post will explain what a neuron is and how researchers and engineers piece these neurons together to build complex systems capable of incredibly challenging tasks. We’ll touch on some of the most popular architecture types, exploring what makes them really good at some tasks (and not so good at others).

Neurons: the building blocks of AI

Neurons are the basic building blocks of AI architectures, loosely modeled after the biological neurons that transmit signals throughout the human brain. Remember, AI models are essentially pattern investigators; they find the underlying pattern in the data. You can think of these neurons as the mathematical functions that are doing this hard investigative work, getting into the weeds of the data and figuring out what’s going on.

The math performed by individual neurons is actually pretty simple: it’s usually just multiplying each input by a weight, adding up the results, and running the total through a simple “activation” function, all of which you could do with a calculator. So how are AI models able to capture such complex patterns, like the ones involved in language and vision? The trick is to string together a lot of neurons, like hundreds of millions of them.
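Here’s a minimal Python sketch of one neuron, assuming the common textbook recipe: multiply each input by a weight, add everything up along with a bias, then pass the total through an activation function. We’ve picked ReLU (which just clips negative numbers to zero) as the activation; real models use several different ones. The names `neuron` and `relu` are our own, and the weights and bias are made-up numbers standing in for values a model would learn during training.

```python
def relu(z):
    # Activation function: keep positive values, clip negatives to 0.
    return max(0.0, z)

def neuron(inputs, weights, bias):
    # Multiply each input by its weight, add them up, add the bias...
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # ...then apply the activation function.
    return relu(total)

# Made-up weights and bias, standing in for values a model would learn.
print(neuron([2.0, 3.0], weights=[0.5, -1.0], bias=2.5))  # 2*0.5 + 3*(-1.0) + 2.5 = 0.5
print(neuron([2.0, 3.0], weights=[0.5, -1.0], bias=1.0))  # total is -1.0, so ReLU outputs 0.0
```

That’s genuinely all one neuron does; the interesting behavior comes from wiring many of them together.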

This stringing together is where our first “decision” (and thus the early stages of an architecture) starts to come into play. Researchers can combine neurons in two main ways.

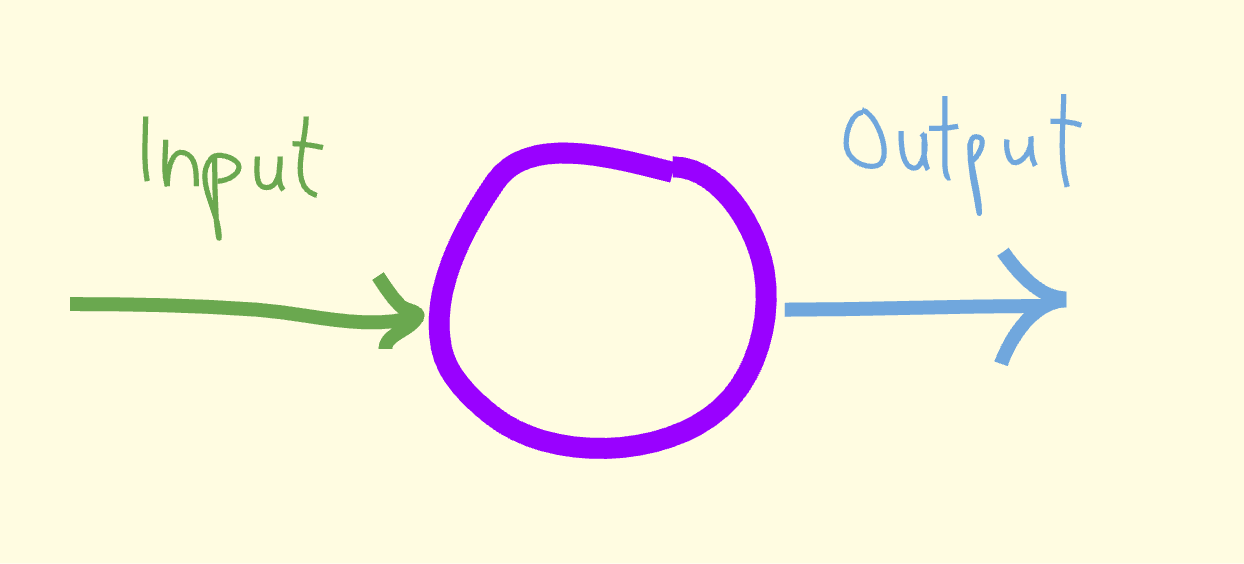

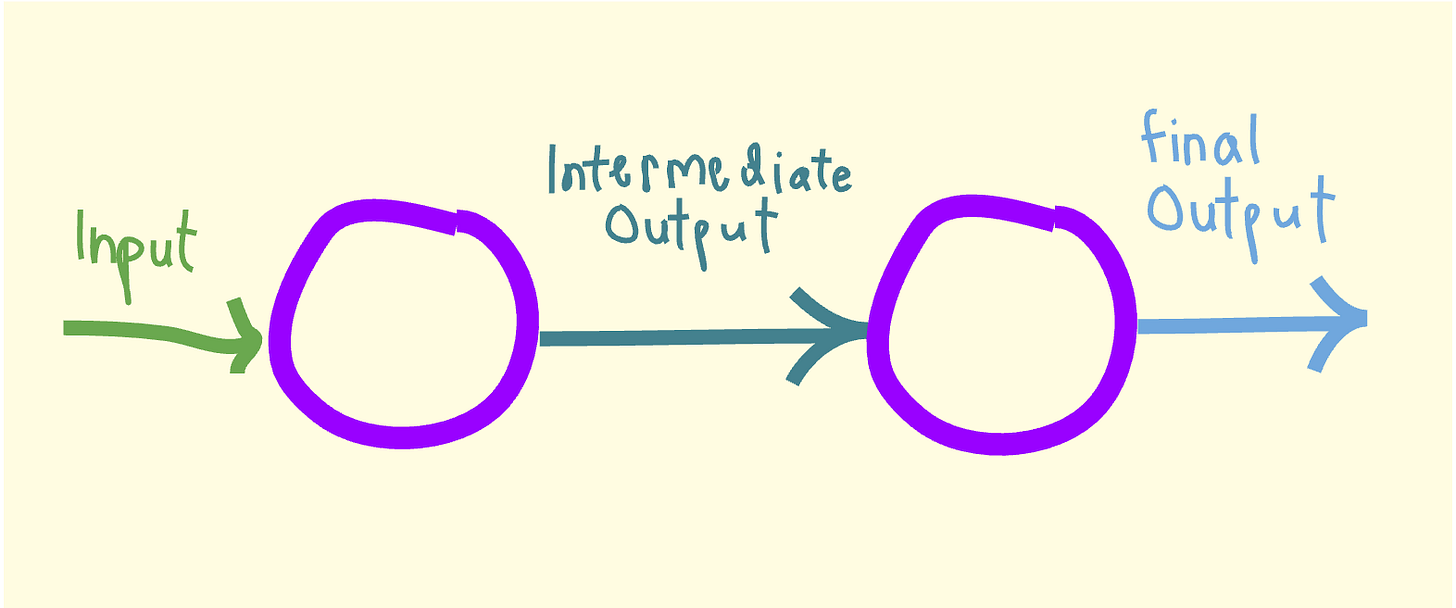

First, neurons can be lined up in a sequence, so the output of one becomes the input of the next.

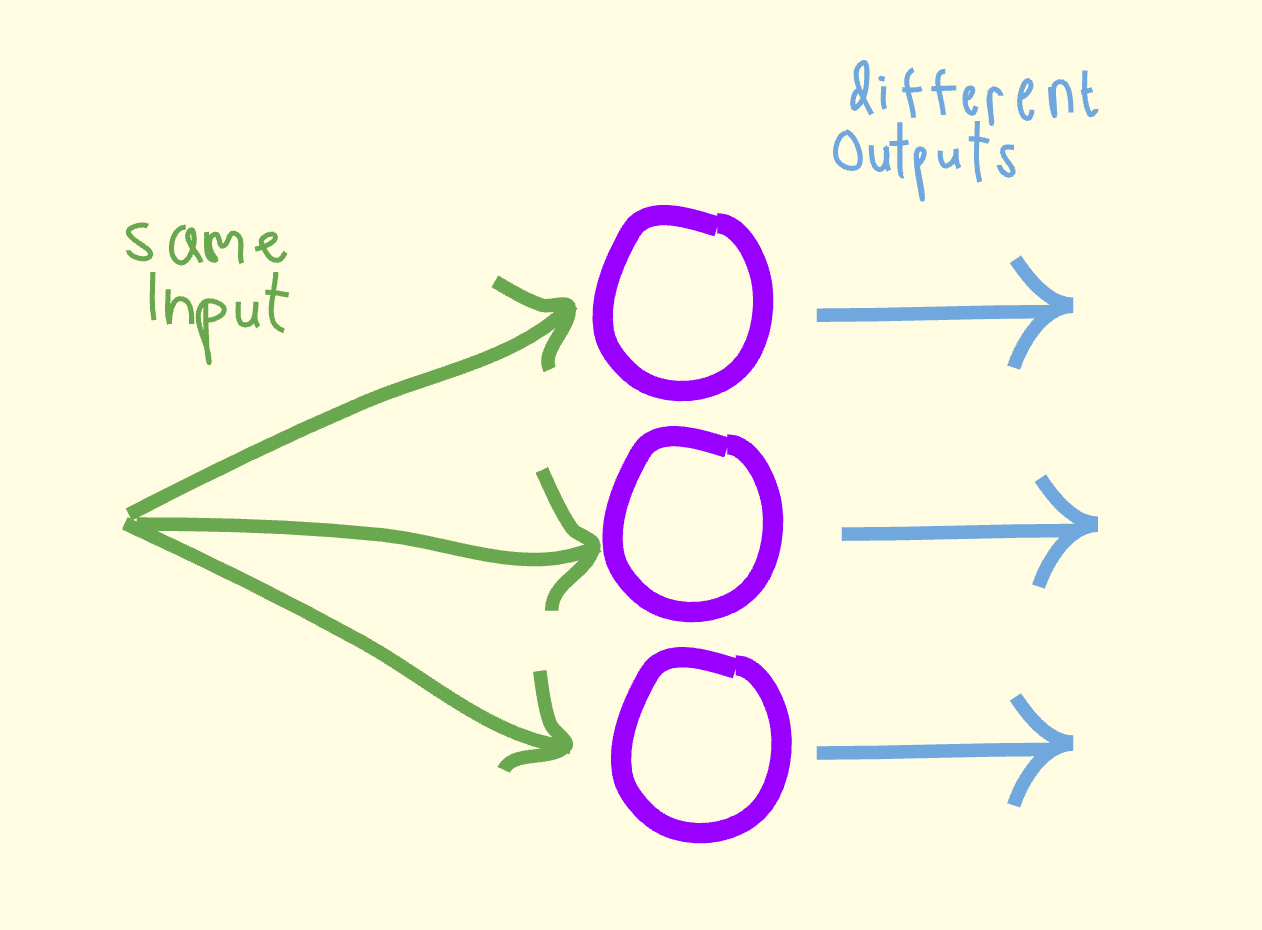
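In code, lining two neurons up in a sequence just means feeding one neuron’s output into the next. This sketch defines a hypothetical `neuron` helper (weighted sum, bias, and an assumed ReLU activation) and uses made-up parameters:

```python
def relu(z):
    # Activation function: keep positive values, clip negatives to 0.
    return max(0.0, z)

def neuron(inputs, weights, bias):
    # Weighted sum of the inputs, plus a bias, through the activation.
    return relu(sum(x * w for x, w in zip(inputs, weights)) + bias)

# Neuron 1 reads the raw inputs: 1.0*0.5 + 2.0*0.25 + 0.0 = 1.0
first = neuron([1.0, 2.0], weights=[0.5, 0.25], bias=0.0)
# Neuron 2's only input is neuron 1's output: 1.0*3.0 - 1.0 = 2.0
second = neuron([first], weights=[3.0], bias=-1.0)
print(first, second)  # 1.0 2.0
```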

Neurons can also be stacked in layers, where they don’t interact directly but take the same input values.

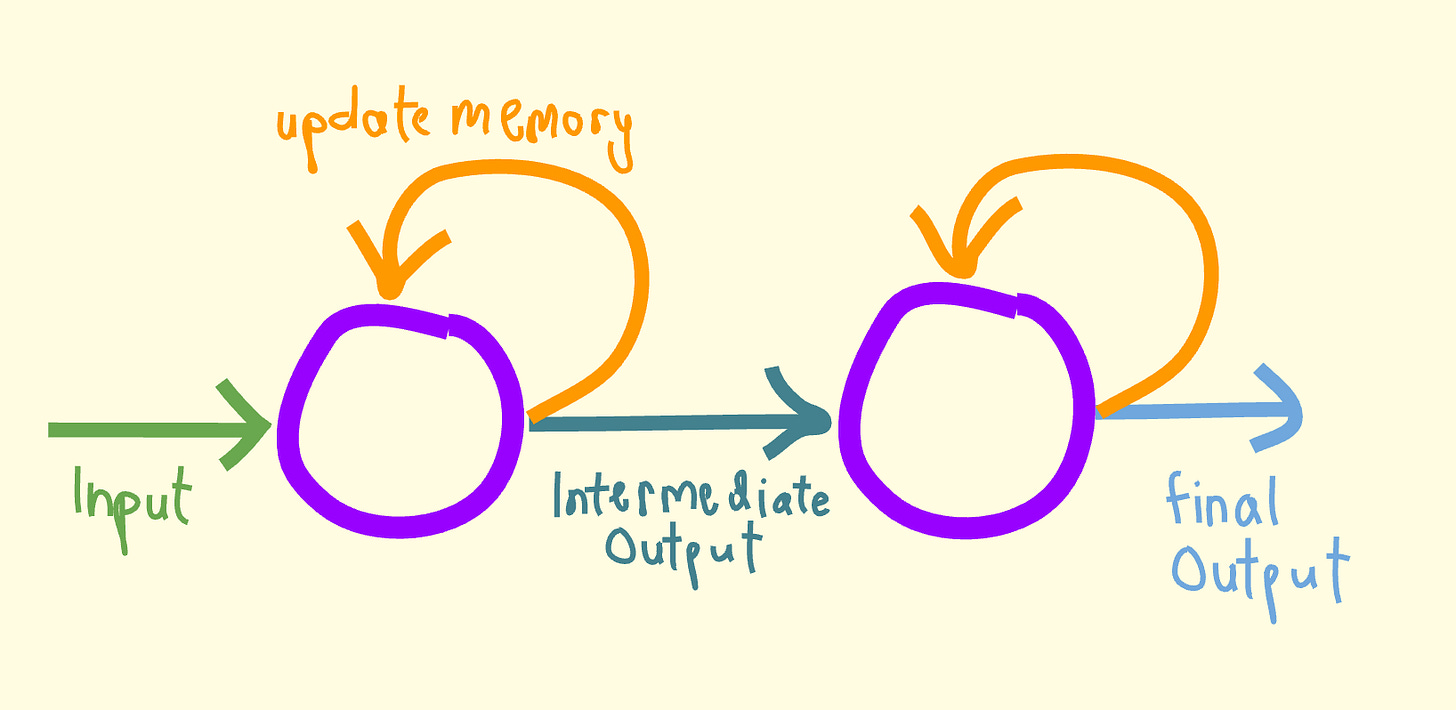
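A layer works differently: instead of passing outputs along, several neurons each receive the same inputs and work independently. A minimal sketch, again assuming a ReLU activation and made-up weights; `layer` is a hypothetical helper for illustration, not a library function:

```python
def relu(z):
    # Activation function: keep positive values, clip negatives to 0.
    return max(0.0, z)

def neuron(inputs, weights, bias):
    return relu(sum(x * w for x, w in zip(inputs, weights)) + bias)

def layer(inputs, neurons):
    """Every neuron in the layer gets the *same* inputs; none of them see each
    other's outputs. Each entry in `neurons` is a (weights, bias) pair."""
    return [neuron(inputs, weights, bias) for weights, bias in neurons]

shared_inputs = [1.0, 2.0]
outputs = layer(shared_inputs, [
    ([1.0, 1.0], 0.0),   # 1*1 + 2*1 + 0 = 3.0
    ([0.5, -1.0], 0.0),  # 1*0.5 - 2*1 = -1.5, clipped to 0.0 by ReLU
    ([0.0, 0.5], 1.0),   # 0 + 2*0.5 + 1 = 2.0
])
print(outputs)  # [3.0, 0.0, 2.0]
```

Two inputs went in and three numbers came out: one per neuron, each picking up on a different weighted combination of the same data.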

Some special neurons can even feed their own output back in as an input on the next step, giving them a kind of simulated memory. This is helpful when you’re handling a sequence of data inputs, like a bunch of frames from the same video, and you want your model to use knowledge from earlier frames to contextualize what’s happening in later frames.

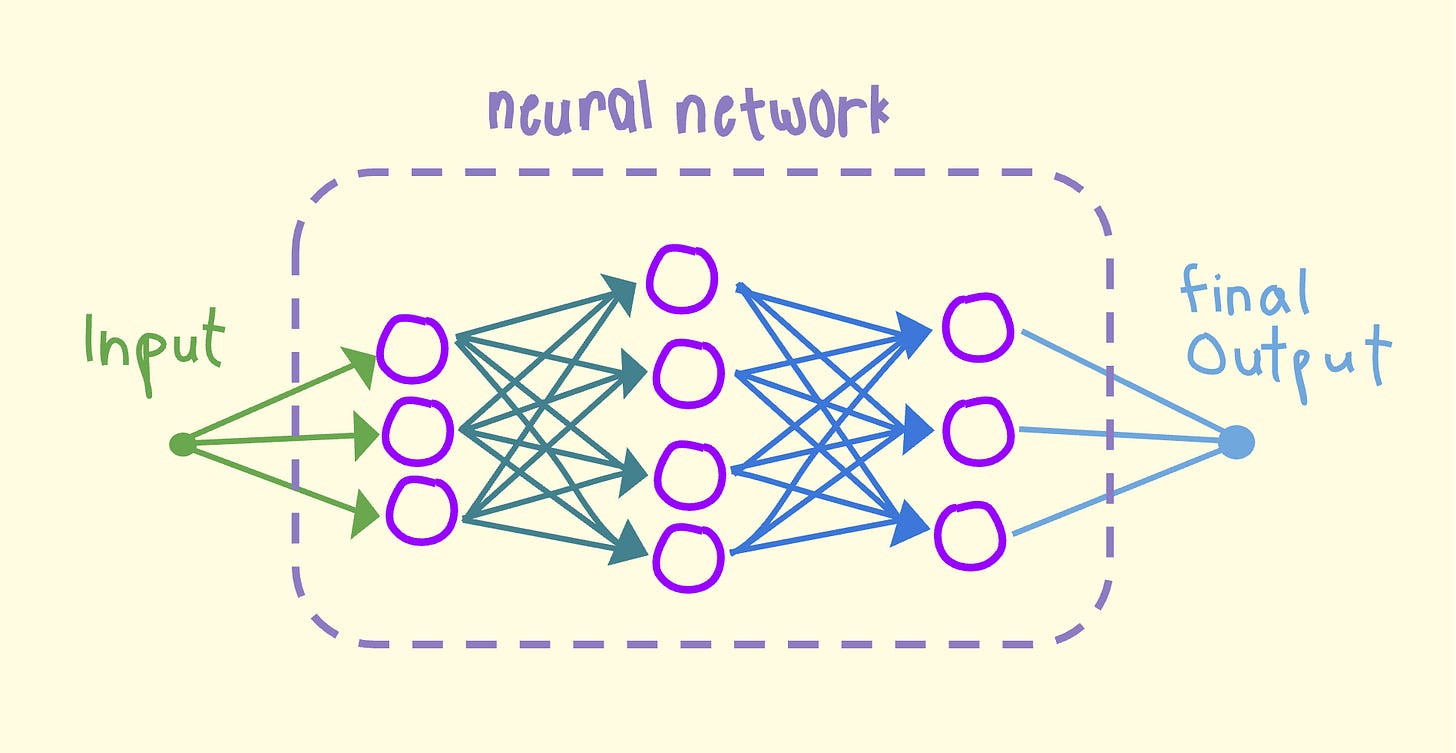
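To illustrate the memory idea, here’s a sketch of a single recurrent neuron, assuming the classic “simple RNN” setup: at each step, the neuron combines the new input with its own previous output and squashes the result with tanh. The neuron’s weights stay the same throughout; what carries over from step to step is its last output. The function name, the weights, and the three “frames” are all hypothetical.

```python
import math

def run_recurrent_neuron(sequence, w_input, w_memory, bias):
    """A single recurrent neuron: at each step it combines the new input
    with its own output from the previous step."""
    previous_output = 0.0  # nothing is remembered before the first step
    outputs = []
    for x in sequence:
        # The weights never change here; only the remembered output does.
        previous_output = math.tanh(w_input * x + w_memory * previous_output + bias)
        outputs.append(previous_output)
    return outputs

# Hypothetical stand-ins for, say, one feature extracted from three video frames.
frames = [1.0, 1.0, 1.0]
with_memory = run_recurrent_neuron(frames, w_input=0.5, w_memory=0.8, bias=0.0)
without_memory = run_recurrent_neuron(frames, w_input=0.5, w_memory=0.0, bias=0.0)
print(with_memory)     # outputs grow as earlier frames add context
print(without_memory)  # identical outputs: each frame is treated in isolation
```

Feeding in the same value three times gives three different outputs when memory is switched on, because each step also sees what came before. With the memory weight set to zero, the neuron treats every frame on its own.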

Put these configurations together, and you’ve got an Artificial Neural Network – the most basic model architecture. Neural networks are just layers (stacks of neurons) arranged in a sequence.

Different networks might follow different rules: in the setup above, each neuron accepts input from every single neuron in the layer preceding it. This is what computer scientists call a fully connected network. (The configuration pictured is also a Feedforward Neural Network, or FNN, because information only flows forward from one layer to the next and never loops back.) But networks can also be partially connected, meaning that each neuron accepts input from only some of the neurons in the previous layer.
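Putting layers in a sequence gives you a whole network, and a connectivity mask is one simple way to sketch the fully vs. partially connected distinction. Everything here is illustrative: `layer`, `feedforward`, and the `mask` argument are hypothetical helpers, the activation is assumed to be ReLU, and the weights are made up.

```python
def relu(z):
    # Activation function: keep positive values, clip negatives to 0.
    return max(0.0, z)

def layer(inputs, weights, biases, mask=None):
    """One layer of neurons that all read the same inputs.
    weights[j] holds neuron j's weight for each input. With mask=None the
    layer is fully connected; otherwise mask[j][i] == 0 cuts the connection
    from input i to neuron j."""
    if mask is None:
        mask = [[1] * len(inputs) for _ in weights]
    return [relu(sum(x * w * keep for x, w, keep in zip(inputs, row, row_mask)) + b)
            for row, row_mask, b in zip(weights, mask, biases)]

def feedforward(inputs, layers):
    """Layers arranged in a sequence: each layer's outputs feed the next
    layer, and nothing ever flows backward."""
    signal = inputs
    for params in layers:
        signal = layer(signal, *params)
    return signal

# Hypothetical 3 -> 2 -> 1 network with made-up parameters.
fully_connected = [
    ([[1.0, 1.0, 1.0], [1.0, 1.0, 1.0]], [0.0, 0.0]),  # 2 neurons, each sees all 3 inputs
    ([[1.0, 1.0]], [0.0]),                           # 1 neuron, sees both outputs above
]
# Same weights, but each first-layer neuron only listens to two of the three inputs.
partially_connected = [
    ([[1.0, 1.0, 1.0], [1.0, 1.0, 1.0]], [0.0, 0.0], [[1, 1, 0], [0, 1, 1]]),
    ([[1.0, 1.0]], [0.0]),
]

print(feedforward([1.0, 2.0, 3.0], fully_connected))      # [12.0]
print(feedforward([1.0, 2.0, 3.0], partially_connected))  # [8.0]
```

Same inputs, same weights, different answers: cutting connections changes what each neuron gets to see, which is exactly the kind of choice an architecture pins down.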

You might notice that – just like an individual neuron – a neural network takes an input and returns an output. The architecture itself can be treated like a big mathematical function, and used as part of an even larger architecture. Kind of like these.
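Because a whole network is just inputs in, outputs out, you can wrap one up as a function and plug it into something bigger. In this sketch, `make_network`, `first_net`, `second_net`, and `bigger_model` are hypothetical names, with ReLU activations and made-up parameters assumed:

```python
def relu(z):
    # Activation function: keep positive values, clip negatives to 0.
    return max(0.0, z)

def make_network(layers):
    """Wrap a small fully connected network as a plain function:
    inputs in, outputs out, just like a single neuron."""
    def run(inputs):
        signal = inputs
        for weights, biases in layers:
            # Each layer's outputs become the next layer's inputs.
            signal = [relu(sum(x * w for x, w in zip(signal, row)) + b)
                      for row, b in zip(weights, biases)]
        return signal
    return run

first_net = make_network([([[1.0, 1.0], [1.0, -1.0]], [0.0, 0.0])])  # 2 inputs -> 2 outputs
second_net = make_network([([[2.0, 1.0]], [0.0])])                  # 2 inputs -> 1 output

def bigger_model(inputs):
    # The larger architecture treats each network as a single building block.
    return second_net(first_net(inputs))

print(bigger_model([3.0, 1.0]))  # [10.0]
```

Here `first_net` turns `[3.0, 1.0]` into `[4.0, 2.0]`, and `second_net` turns that into `[10.0]`; `bigger_model` neither knows nor cares how many neurons live inside each piece.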

This is where model architectures get really interesting.