Technically Monthly (January 2026)

Comparing vibe coding tools, making sure your AI workflows don’t hallucinate into oblivion, and why careless AI use might actually get you replaced.

Hello distinguished Technically readers,

My sources tell me it’s not too late to say happy new year.

2025 was the year everyone started to use AI at work. 2026 is the year we figure out how to get the most out of it, vibe code things that actually work, and steer clear of hallucinations (unless you’re into that kind of thing, in which case you might get oneshotted by ayahuasca).

So here’s what we’ve been up to for the past month.

New on Technically

2026 vibe coding tool comparison

Available as a free post on Substack (courtesy of Vercel) and now in its permanent home in the AI, it’s not that Complicated knowledge base.

All hail vibe coding, savior of the Ideas Guy. But which vibe coding tool should you use? I tested 4 of the most popular options by building the same app on each: an internal dashboard for Vandelay Industries’ potato chip import/export operations.

For each I cover how well they work, any quirks to watch out for, plus pricing and how far you can get on a free plan.

The AI user’s guide to evals

Available as a paid preview on Substack, and now in its permanent home in the AI, it’s not that Complicated knowledge base.

As non-technical people build more and more stuff with AI, they are starting to run into the same problem as software engineers: it’s hard to know whether things are actually working. This guide to evals gives you the background you need to start adding effective monitoring to your AI system.

Based on my conversation with eval expert Hamel Husain, this post covers:

Why evals matter: moving from “I think the bot is getting better” to “hallucination rate dropped from 15% to 3% this week”

Look at the data: why you need to examine your failures before writing fancy tests

Assertions over LLM judges: why simple keyword checks beat complex AI-judging-AI setups

A practical 4-step workflow: vibe check, spreadsheet, simple fixes, then targeted evals

This one is pretty practical.
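To make the "assertions over LLM judges" idea concrete, here is a minimal sketch in Python. It is not code from the post: the `Case` class, the `passes` and `failure_rate` helpers, and the sample answers are all made up for illustration. It shows how plain keyword checks over a handful of logged answers can give you a number to track week over week.

```python
from dataclasses import dataclass


@dataclass
class Case:
    """One logged interaction plus the keyword checks we expect it to satisfy."""
    question: str
    answer: str
    must_include: list[str]      # keywords a correct answer should mention
    must_not_include: list[str]  # phrases that signal a known failure


def passes(case: Case) -> bool:
    """Plain keyword assertions: no second model is asked to grade the answer."""
    text = case.answer.lower()
    has_required = all(k.lower() in text for k in case.must_include)
    has_forbidden = any(k.lower() in text for k in case.must_not_include)
    return has_required and not has_forbidden


def failure_rate(cases: list[Case]) -> float:
    """Share of cases failing at least one assertion, between 0.0 and 1.0."""
    if not cases:
        return 0.0
    failed = sum(1 for c in cases if not passes(c))
    return failed / len(cases)


# Hypothetical sample data, invented for this sketch.
CASES = [
    Case("What is your refund window?",
         "Refunds are accepted within 30 days of purchase.",
         ["30 days"], ["lifetime"]),
    Case("Do you ship to Canada?",
         "Yes, we ship to Canada, and every order has a lifetime warranty.",
         ["canada"], ["lifetime warranty"]),  # invented warranty: should fail
    Case("When is support open?",
         "Support is open Monday to Friday.",
         ["monday", "friday"], []),
    Case("How do I reset my password?",
         "You can reset your password from the Settings page.",
         ["settings"], []),
]

if __name__ == "__main__":
    print(f"failure rate: {failure_rate(CASES):.0%}")
```

In practice the `must_not_include` phrases would come from looking at your real failures first, which is why the spreadsheet step comes before the targeted evals.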

AI will replace you at your job if you let it

Also available as a free post on Substack and in the AI, it’s not that Complicated knowledge base.

Like you, I’m extremely tired of the recurring headlines about “AI replacing the workforce,” written almost exclusively by people who know nothing about AI and have never been part of said workforce. And yet… many people are using AI so carelessly that you won’t be able to blame their bosses when they decide AI can do the work in their stead.

I believe that staying ahead is going to mean really taking the time to use AI intelligently, customizing it to your needs, tweaking your prompts, and keeping an iterative mindset. AI will only replace you at your job if you let it.

From the AI Reference: Prompt Engineering

If you weren’t in the loop and got too caught up in the holidays of it all, we recently launched the AI Reference, a companion to the Technically Universe focused specifically on AI concepts. This month’s featured term is one everyone using AI tools should understand:

Prompt Engineering

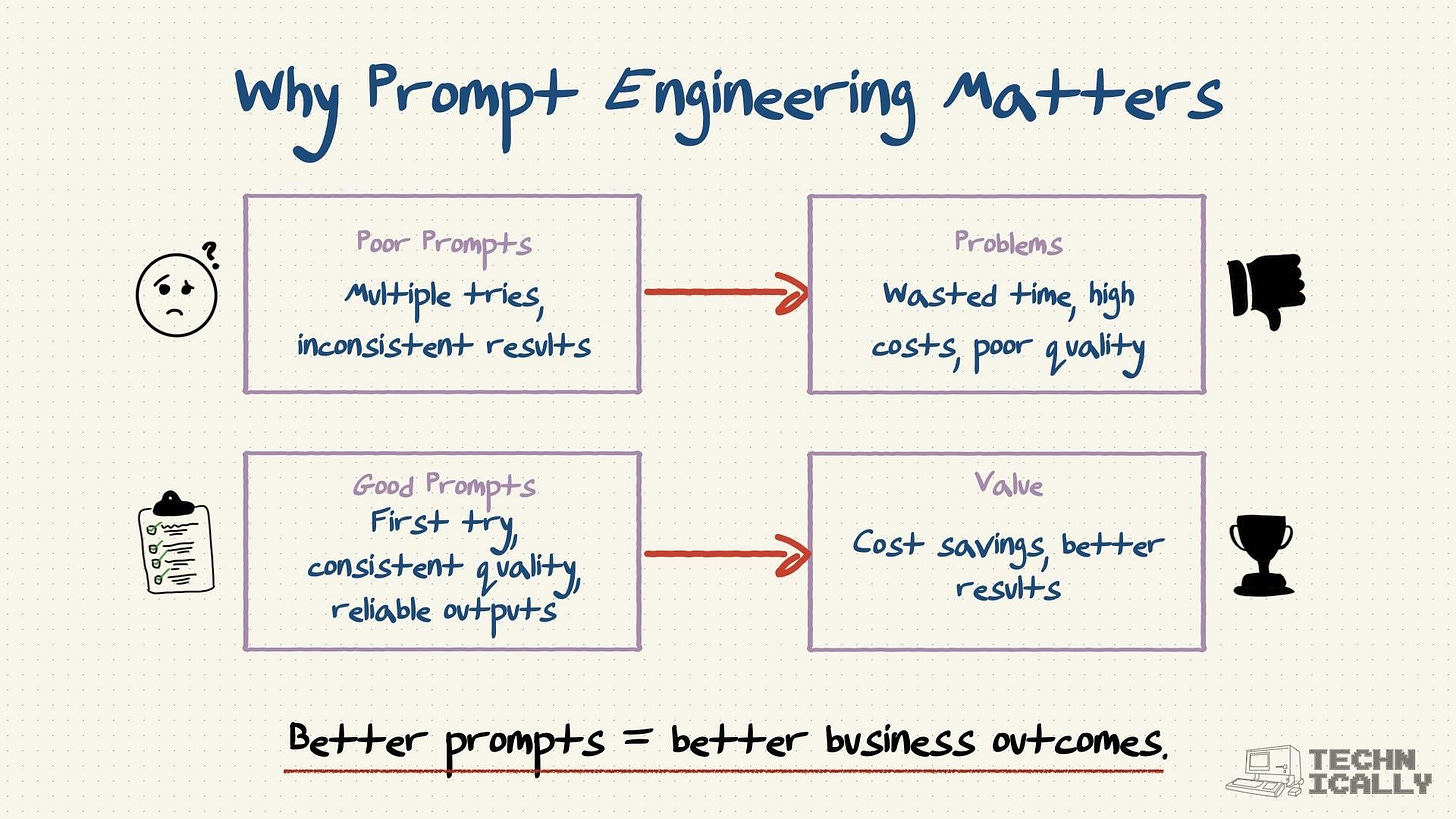

Prompt engineering is the art of talking to AI models in a way that gets you the results you actually want. It’s the practice of crafting inputs that reliably produce the outputs you’re looking for, whether that’s generating marketing copy, analyzing data, or answering customer questions.

Here’s the thing: AI models are incredibly powerful, but they’re also incredibly literal. Ask ChatGPT “write about dogs” and you might get a 500-word essay about canine evolution. Ask it “write 3 bullet points about why dogs make good pets” and you’ll get exactly what you need. That difference in specificity is prompt engineering in action.

Why this matters:

Good prompts can save you money, time, and frustration when working with AI

The more context and structure you provide, the better models can give you what you actually want

Think of it as learning to communicate with a very smart but literal-minded assistant

Key techniques include few-shot prompting, chain of thought reasoning, and setting clear constraints
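Few-shot prompting and clear constraints are easier to see in code than in a definition. The sketch below is only an illustration: `build_prompt` is a hypothetical helper, the "Input:/Output:" layout is one common convention rather than a required format, and the example animals are invented. It just assembles the prompt text; sending it to a model is left out because that depends on the tool you use.

```python
def build_prompt(task, examples, constraints, new_input):
    """Assemble a few-shot prompt: task, constraints, worked examples, new input."""
    lines = [task, ""]

    # Clear constraints: spell out the format instead of hoping for it.
    if constraints:
        lines.append("Constraints:")
        lines.extend(f"- {c}" for c in constraints)
        lines.append("")

    # Few-shot examples: show the model the pattern you want repeated.
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")

    # End on an open "Output:" so the model completes the same pattern.
    lines.append(f"Input: {new_input}")
    lines.append("Output:")
    return "\n".join(lines)


# Hypothetical values, invented for this sketch.
prompt = build_prompt(
    task="Summarize why each animal makes a good pet.",
    examples=[
        ("cats", "independent; quiet; easy to house-train"),
        ("rabbits", "gentle; small; happy indoors"),
    ],
    constraints=[
        "Use exactly 3 short phrases separated by semicolons",
        "No more than 12 words in total",
    ],
    new_input="dogs",
)

if __name__ == "__main__":
    print(prompt)
```

Compare this with a bare "write about dogs": the task, the format, and two worked examples leave much less room for the model to guess what you meant.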

Coming up this month

We’ve got three posts on deck for January:

AI for PMMs: automating customer segmentation. A look at how product marketing teams can start using AI to segment customers.

What’s fine-tuning? A breakdown of what fine-tuning is and why it comes up so often in AI discussions.

How AI chips get made. An overview of how the hardware behind modern AI systems is produced.

Are you using AI at work?

We want to hear what’s actually working. If you’ve automated a tedious task, built something useful, or helped your team adopt AI without chaos, send us a reply and share your story.