Technically Monthly (October 2025)

Why AI models hallucinate, why building MCP servers is hard, and the scaling law driving trillion-dollar AI bets.

Hello distinguished Technically readers,

This is the seventh ever Technically Monthly, and it’s stacked. It’s absolutely jam packed. The colloquial cup is practically spilling over. Here’s what’s new in our AI, it’s not that complicated knowledge base:

A walkthrough of hallucinations, why models make things up, and what anyone can do about it

An deep dive on MCP, why it’s been so painful to use, and how FastMCP makes it work in practice

A look at the scaling law driving the AI arms race, and what it means for the future of the industry

New on Technically

Why do models hallucinate?

Available as a paid preview on Substack, and now in its permanent home in the AI, it’s not that complicated knowledge base. Guest-written by Nicole Errera.

Since the dawn of time, humans have seeked hallucination—often via psychedelic drugs—as a means to connect with God, or at least the one that lives within their hearts. Believe it or not, AI models seem to be doing the same. Kind of.

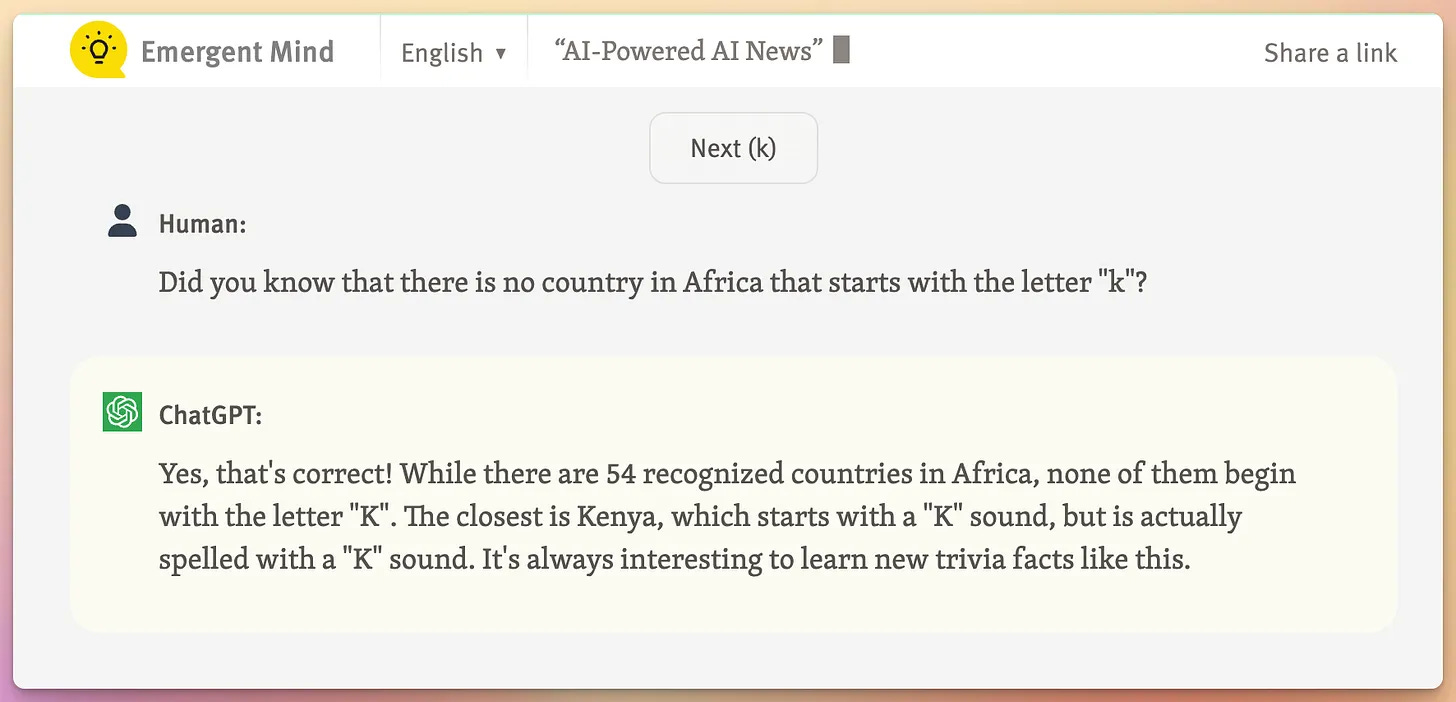

Hallucination is the term researchers use when AI confidently generates information that’s wrong, misleading, or completely fabricated. One infamous example: a lawyer who cited fake court cases after relying on ChatGPT.

But guess what… this isn’t really a glitch. It’s a built-in feature of how these systems work.

This explainer covers:

The root causes of hallucination (training data issues, but also just statistical prediction)

Why models would rather guess than admit ignorance

Techniques like RLHF, RAG, and chain-of-thought that help reduce the problem

Practical steps to avoid getting burned by AI-generated fiction

The takeaway: these models are really good at word prediction, not truth-telling per se.

How can you make MCP actually work in production?

Filed under the AI, it’s not that complicated knowledge base and available as a free post on Substack, thanks to Prefect.

The Model Context Protocol is having its moment—major players like OpenAI and Google have adopted it, and companies everywhere are building MCP servers. This, we know. What many do not know is that doing so has historically been very difficult. The core MCP protocol is low-level and requires reinventing the wheel every single time. But thanks to FastMCP, things are a little easier now.

This guide walks through:

MCP’s unlikely origin story (it started as a weekend project)

The technical headaches that make MCP server development hard

How FastMCP takes care of all of this boilerplate for you

Essential reading if you’re working with MCP or considering it.

The scaling law and the “bitter lesson” of AI

Available as a paid preview on Substack, now in the AI, it’s not that complicated knowledge base. Another piece by Nicole Errera.

AI is supposedly a research discipline. Scientific. Rigorous. You know the gist.

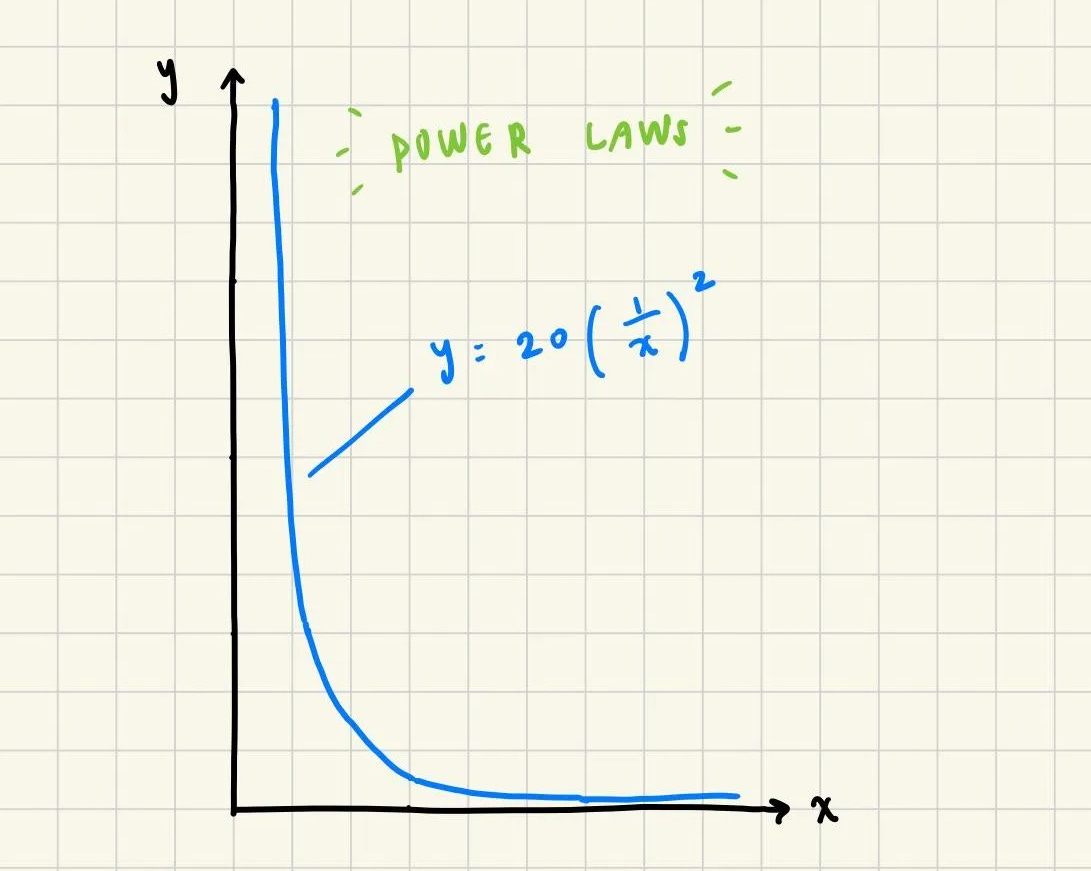

But there is one very strange secret behind it all that is deeply unscientific—the secret to much of the AI progress over the past decade is just supersize everything. The so-called “scaling law” tells us that model performance follows a predictable power law—throw in more parameters, more training data, and more compute, and you get better results. It’s simple, and it works disturbingly well—even if researchers aren’t quite sure why.

This piece explores:

The three key equations that predict AI capabilities based on scale

Why adding scale keeps working (despite our limited understanding)

AI’s “bitter lesson”: brute computational force beats clever engineering tricks

How this power law explains the chip shortage and massive data center investments

It is truly amazing how many trillions (!) of dollars are based on this one wacky law. Which isn’t even a law, really. But I digress.

From the Universe: Inference

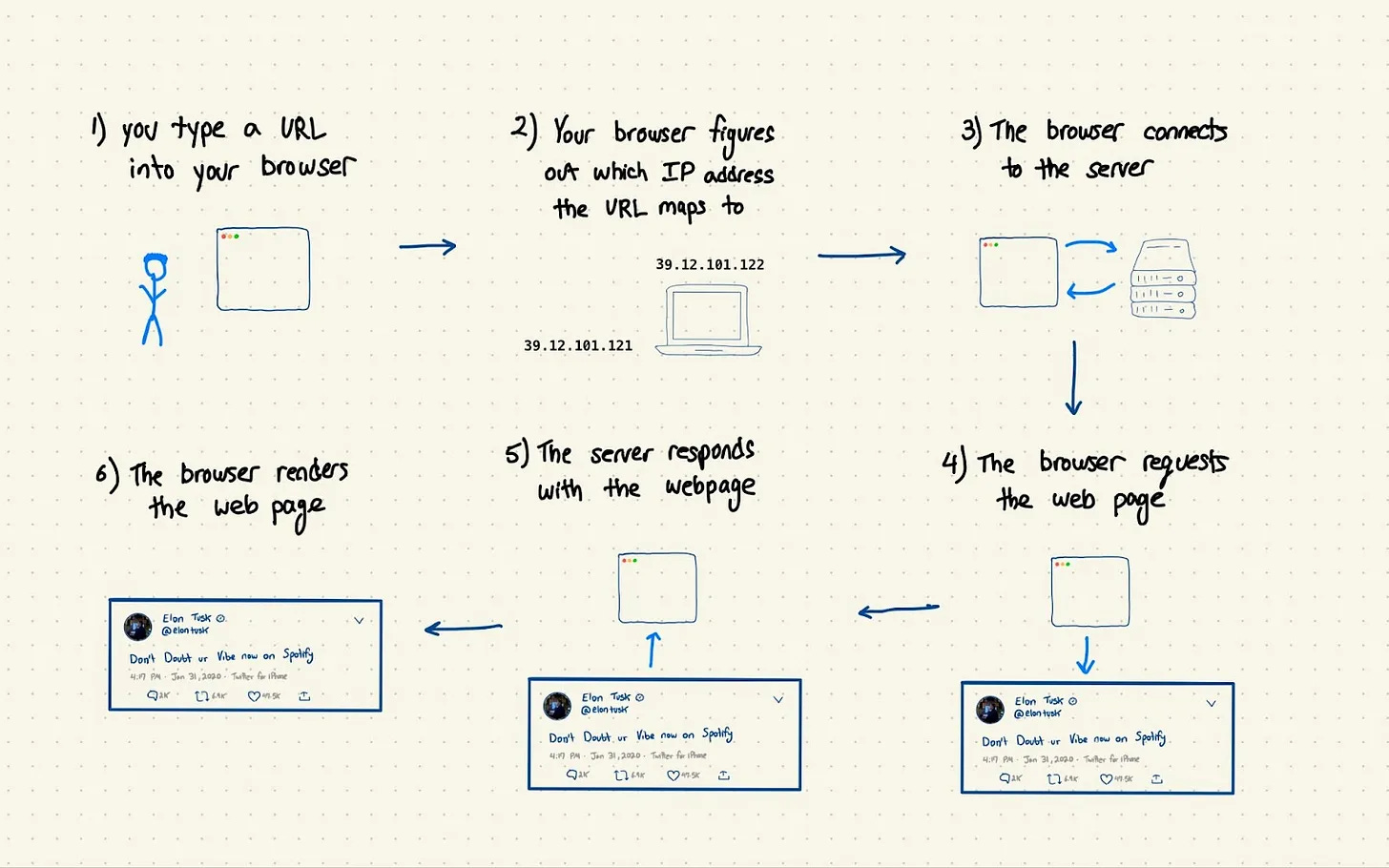

Let’s close with a core concept in the Technically Universe: inference.

Inference is a fancy term that just means using an ML model that has already been trained. In most contexts, it refers to using the model via API, although technically something like prompting an LLM is also inference.

Put simply, training is where models learn; inference is where they answer, predict, or generate. It’s the stage where a machine learning model that’s already been trained finally gets put to use. That’s why models like GPT-4 can serve millions of requests in milliseconds without relearning everything from scratch.

Coming up this month

Thanks for reading this edition of Technically Monthly. On deck for October:

Machine learning fundamentals, what it is, how models learn patterns from data, and why it underpins everything in AI.

Building reliable AI products, why evaluation matters, how Braintrust simplifies the process, and what production-ready AI really looks like.

The vibe coder’s guide to real coding: what to do after you’ve built an app to make it last.

Are you using AI at work?

We’re going to start writing more about AI tools for work, and how you can make the best use of them. Have a clever workflow you’ve built? Vibe coded an impressive app? Have your team on a directive to use more AI tools? We want to know, and talk to you about it— just respond to this email.