Developers hate this one thing (all about code reviews)

Why code reviews take so long, how AI tools like CodeRabbit are speeding them up, and what great teams do to ensure clean code.

Has this ever happened to you? Your developers said it would only take a week to build a feature, but you’re going on week 3 and it’s still not ready yet? And they keep saying that the code is written, but they’re waiting on a review for it?!

Welcome to the wacky, wild world of code reviews. This post is going to go deep on what code reviews are, why they’re important, and why they seem to take longer than they should.

Why we need code reviews in the first place

Code reviews are important because humans aren’t perfect.

Though the myth of the 10x engineer lives on, most commercial projects are built by a team of developers, collaborating on a shared codebase. That app you’re using – be it Gmail, Twitter, Candy Crush, or Instagram – is built and maintained by tons and tons of developers – at least hundreds, and most likely thousands. The larger the company gets, the more developers there are behind the glass.

It probably won’t surprise you that it’s very, very difficult to have that many people working on the same project. The problem is compounded by the fact that many of these folks are across different time zones, have different backgrounds, and have developed different personal styles when writing code. Even worse, some of them might be Europeans.

Under these chaotic conditions, how do you make sure that the code everyone is writing is…good? How do you make sure it runs fast, follows the general standards of the codebase, doesn’t waste any resources, and sets you up with a good foundation to build in the future? If the code developers are writing gets the job done but is hard to use, hard to read, and brittle, you’re going to have issues down the road.

One answer you’re probably already thinking about: testing. Some of the standards your company sets for code quality are objective and thus testable. For example:

Does the code run without any errors?

Does the code run fast?

Is the formatting of the code (spaces, indentation, semicolons) up to specification?

Did you include documentation with your new feature?

This is what CI (continuous integration) is all about. Each time a developer tries to merge in new code, it gets automatically tested for all of these things, and it only goes into the live app version if the tests pass.
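To make that concrete, here is a toy sketch of a CI gate in Python. Everything in it is made up for illustration: the `new_feature` function, the three checks, and the one-second speed budget are assumptions, and a real CI system would run these checks on a server rather than in one script. The shape is the point: every check returns pass or fail, and the merge only happens if all of them pass.

```python
import time
from typing import Callable


def new_feature(numbers: list[int]) -> int:
    """Hypothetical feature under test: add up the even numbers."""
    return sum(n for n in numbers if n % 2 == 0)


def check_runs_without_errors() -> bool:
    # Does the code run without any errors?
    try:
        new_feature([1, 2, 3, 4])
        return True
    except Exception:
        return False


def check_runs_fast(budget_seconds: float = 1.0) -> bool:
    # Does the code run fast? (The budget is an arbitrary example value.)
    start = time.perf_counter()
    new_feature(list(range(100_000)))
    return time.perf_counter() - start < budget_seconds


def check_has_documentation() -> bool:
    # Did you include documentation with your new feature?
    return bool(new_feature.__doc__)


CHECKS: dict[str, Callable[[], bool]] = {
    "runs without errors": check_runs_without_errors,
    "runs fast": check_runs_fast,
    "has documentation": check_has_documentation,
}


def run_ci(checks: dict[str, Callable[[], bool]]) -> dict[str, bool]:
    """Run every check and record whether it passed."""
    return {name: check() for name, check in checks.items()}


def can_merge(results: dict[str, bool]) -> bool:
    """The gate: code only goes in if every single check passed."""
    return all(results.values())


results = run_ci(CHECKS)
print("Merge allowed:", can_merge(results))
```

Notice that each check has a yes-or-no answer. That is exactly what makes it automatable.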

But some of the things that matter to technical leaders aren’t so clear cut. They’re a little more…subjective. How do you test for code that’s clean? How do you test for code that sets up a sound technical foundation for future features?

🔍 Deeper Look

You’ll often hear about Code Quality: it’s about how “efficient, readable, and usable” code is. There are 100 different ways to build a feature, but not all are created equal. It’s exactly like writing: you can communicate the same idea in 100 ways, but some are more concise, understandable, and elegant than others.
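Here is a small, made-up illustration in Python. Both functions below count the active users in a list and give the same answer, so an automated test would pass either one. The names and the sample data are assumptions for the sake of the example.

```python
def count_active_users_clunky(users):
    # Works, but the one-letter names and manual index bookkeeping
    # make the reader do extra work to see what is going on.
    c = 0
    i = 0
    while i < len(users):
        if users[i]["active"] == True:
            c = c + 1
        i = i + 1
    return c


def count_active_users(users):
    """Return how many users are marked active."""
    return sum(1 for user in users if user["active"])


sample_users = [
    {"name": "Ada", "active": True},
    {"name": "Grace", "active": False},
    {"name": "Linus", "active": True},
]

print(count_active_users_clunky(sample_users))
print(count_active_users(sample_users))
```

A human reviewer would likely ask for the second version. No test fails on the first one; it is simply harder to read and easier to break later.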

The simple answer is to have a human being review your code before it gets merged in. And this is exactly what code reviews are, at their core: a human being checking your code and making sure it’s good, for lack of a better word. Simple? Yes, perhaps to the common eye. But beneath these calm waters lie sinister mysteries…

How code reviews happen, and why they’re so slow

There are a million different ways to do a code review. But they all fit into 2 groups:

(1) Retroactive (over the shoulder / pass around)

(Did an NFL player name these?) The first kind of review is retroactive: after you write your code it gets manually reviewed by other people on your team. This can happen async, where a fellow engineer reads through your code and leaves a few comments on a Pull Request. But it can also be synchronous: you meet with the reviewer, walking them through your code and why you wrote it the way you did. They’ll probably have a few suggestions for changes.

You might also hear the phrase tool-assisted reviews – these are regular retroactive reviews, but with 🤯 tools like Gerrit to help reviewers work faster and more efficiently.

(2) Proactive (pair programming)

A lot of people don’t like retroactive code reviews: the reviewer usually lacks context on the feature, and the setup can be inherently uncollaborative. Just imagine that one coworker who keeps requiring you to make changes that you disagree with. And for some reason he also smells like garlic?

An alternative is being proactive: having the reviewer actually build the feature with the developer, pairing together to program. The developer writes the code, and the reviewer discusses and critiques it in real time. This takes a lot longer than async reviews, but is generally more collaborative and gives the reviewer much more context on why the developer wrote the code she did.

Whether these sessions are proactive or retroactive, reviewers are generally looking for the same things: code that’s clean and high quality. At smaller companies, this might be mostly a vibe check. The reviewer is experienced, knows what high quality code looks like, and uses her own judgement. Or, you know, is just too busy and says “looks good to me!” (LGTM).

But at larger companies, reviewer standards are often explicitly codified. Here’s an example of what Google looks for in code reviews. It’s not a vibe check anymore, it’s a fairly rigorous test that usually involves several people. There are specific, agreed upon standards of quality that reviewers are looking for; these take more time and effort to pass, and you’re more likely to need to make revisions to your first version.

The major issue with code reviews: they take too long

There’s one major issue with all of these kinds of code review: they’re slooooooooooow. There’s a lot of code to review, especially if you want to give it the proper time of day to be a helpful coworker. It takes time to get the context you need to accurately judge someone else’s code, which may be part of a section of the codebase you’ve never seen before. And you need to balance reviewing with your own engineering work that someone else is going to review.

Code review today is so slow that it often takes longer than the feature itself took to build. This is a common cause of frustration for PMs, who wonder why the feature that should have taken a week to build is still not ready after 3. Your engineers will tell you that they’ve already submitted the code for review, but have been waiting for comments for days. Meanwhile, their Instagram stories show them in the climbing gym at 3PM on a Tuesday. “Waiting for code review!”

And as if that weren’t bad enough, everything is about to blow up because of AI generated code. Almost all developers are using AI tools in some way, shape, or form to generate code. Rarely is a developer sitting down and writing 100% of their code anymore. Instead they’re using tools like Cursor and GitHub Copilot to write it for them. They are shipping more code, faster than ever – and are simultaneously more detached from their code than ever. How do you do a code review when the authoring developer barely even wrote it himself?

AI is coming for code reviews (but in a good way)

What if you could get the benefits of CI – quick, automated tests and reviews – while still being able to test for the more subjective things like code quality?

AI is the most promising thing in years when it comes to automating code reviews. It can exercise human-like subjective judgement without the Human Tax™, allowing developers to write good, clean code a lot faster. Instead of waiting for a few days for a peer to review your code, you can use an AI model to critique it and suggest changes.

But this is a lot trickier than it looks. If you just paste your code into a foundation model like ChatGPT or Claude and ask it to critique for code quality, you’ll most likely get generic results.

These models don’t know your codebase, your organization’s standards, why you made this change, or any of that important context. All the things that your human coworkers do have. In theory, you could try including it yourself: prompt a model to look for high code quality (whatever your standards are), and then paste the code you just wrote into it.
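As a rough sketch of what "including it yourself" could look like, here is a few lines of Python that glue your standards, the reason for the change, and the code into one prompt. The function name, the example standards, and the prompt wording are all assumptions for illustration. It only builds the text; you would still paste it into (or send it to) whichever model you use.

```python
def build_review_prompt(standards: list[str], change_description: str, code: str) -> str:
    """Assemble a plain-text review prompt from team standards, intent, and code."""
    standards_block = "\n".join(f"- {rule}" for rule in standards)
    return (
        "You are reviewing a code change for quality.\n\n"
        f"Our team's standards:\n{standards_block}\n\n"
        f"Why this change was made:\n{change_description}\n\n"
        f"Code to review:\n{code}\n\n"
        "List concrete problems and suggest fixes."
    )


# Hypothetical inputs: swap in your own standards and code.
prompt = build_review_prompt(
    standards=[
        "Prefer descriptive names over abbreviations",
        "Every public function needs a docstring",
    ],
    change_description="Adds a helper that totals order amounts for the checkout page.",
    code="def total(xs):\n    return sum(xs)",
)

print(prompt)
```

This helps, but you are still hand-carrying the context every single time, and the model only knows what you remembered to paste in.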

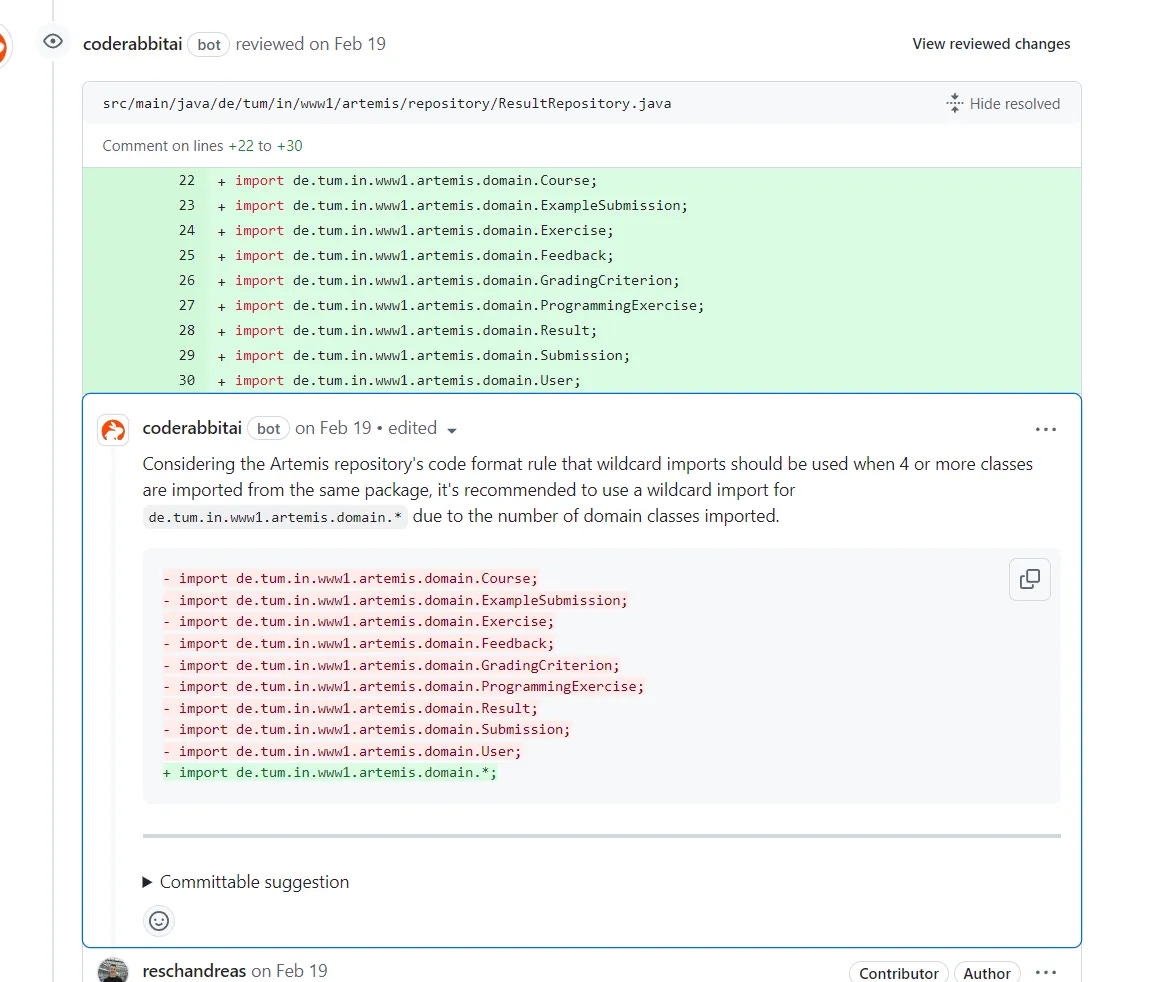

But instead, I’m seeing more developers use dedicated AI code review tools like CodeRabbit, the generous sponsor of this post. CodeRabbit uses AI to do code reviews for you. It packs various models with the context they need to make good critiques (more on this later), and integrates them directly into your workflow. Then, when you open a PR (or any time), CodeRabbit reviews your code, suggests changes, and even implements them for you.

One of the important things that CodeRabbit and tools like it get you is context: like what files have been changed in the past that are like this one, the description of the Pull Request that the authoring developer opened, and which open tickets are related to this code. CodeRabbit uses 20+ contextual inputs altogether.

They’ve also gathered universal standards for code quality and applied them to their models, and they let you input your organization’s own guidelines for code quality. More context = better judgements from the model on code quality. You can also correct CodeRabbit on the fly and tell it what to do differently next time.

If you want to get a developer’s perspective on how this changes their workflow, I highly recommend Tom Smykowski’s walkthrough post.

Because AI-based code reviews run immediately, they keep developers in the flow state. When you get immediate feedback on your code, you can stay locked into where you are in the codebase, not needing to context switch back to this feature after taking a few days on something else. You don’t only save time on the code review itself; you save it on any changes you need to make because of the code review.

Looking forward, I don’t think code review tools like CodeRabbit are going to completely replace the human element in code review – but they’re going to help a lot. And if we can reduce the amount of time code sits waiting for review, we can all get our features live faster and keep PMs happy.

In the meantime, you can try CodeRabbit for free (and it’s free forever for public repositories). It integrates with whatever source control you use (GitHub, GitLab, etc.) and can be as cheap as $12 a month. Give it a try!