What is RLHF?

How AI models learned to stop being weird and start being helpful.

The TL;DR

Reinforcement Learning from Human Feedback (RLHF) is the secret sauce that transforms powerful but useless AI models into the helpful, coherent assistants we use today, like ChatGPT.

LLMs are trained on the entire internet, which gives them a ton of knowledge…but not a clue how to use it

RLHF refines them by showing them what we think a “good” answer looks like, teaching them how to be helpful assistants

There are 3 steps to RLHF, starting with humans ranking different AI-generated answers to common questions

RLHF is critically important and a major focus of most AI labs today

LLMs before RLHF: absolute genius toddlers

If you were playing with AI models a few years ago, before the ChatGPT moment – or if you’ve ever accidentally used the “base model” of any of the popular models today – you may remember what life looks like without RLHF. You might ask a simple question and get back a wall of text that was technically correct but completely missed the point. Or the model might hallucinate wildly, confidently invent facts, or worse, regurgitate some of the green toxic sludge it learned from the darker corners of the internet.

These early models were like a genius who had read every book in the world but had zero social skills. They had all the information, but they had no concept of what was helpful, polite, or relevant to a human – nor how to be concise, like at all.

To understand why this is true, you have to remember that LLMs are essentially probabilistic word generators that are trained to be really, really good at the word-guessing game. When you give them a prompt, they use their learned probabilities to pick a likely next word, then a likely next word after that, and so on and so forth. The response is based purely on the patterns that appear most often in the model’s internet-scale training set; the model has no idea how to be concise, helpful, or any of the other things we value in our assistant-like LLMs.
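To make the word-guessing game concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the tiny `NEXT_WORD_PROBS` table and the helper names are made up, and a real LLM computes its probabilities with a neural network over tokens rather than looking them up in a hand-written dictionary.

```python
import random

# Hypothetical toy "model": for each word, the probability of each next word.
NEXT_WORD_PROBS = {
    "the": {"cat": 0.5, "dog": 0.3, "mushroom": 0.2},
    "cat": {"sat": 0.7, "ran": 0.3},
    "dog": {"ran": 0.6, "sat": 0.4},
    "mushroom": {"sat": 1.0},
    "sat": {"down": 1.0},
    "ran": {"away": 1.0},
}


def most_likely_next(word):
    """Greedy guess: the single most probable next word, or None if unknown."""
    options = NEXT_WORD_PROBS.get(word)
    if not options:
        return None
    return max(options, key=options.get)


def sample_next(word, rng):
    """Random guess, weighted by probability (the 'random' part of the game)."""
    options = NEXT_WORD_PROBS.get(word)
    if not options:
        return None
    words = list(options)
    weights = [options[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


def generate(start, max_words=5, rng=None):
    """Keep guessing the next word until the table runs out or the limit is hit.

    With rng=None the most likely word is always chosen; otherwise words are sampled.
    """
    words = [start]
    while len(words) < max_words:
        last = words[-1]
        nxt = most_likely_next(last) if rng is None else sample_next(last, rng)
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


print(generate("the"))  # the cat sat down
print(generate("the", rng=random.Random(0)))  # a sampled sentence; may differ from the greedy one
```

Notice that nothing in this loop knows what a “good” answer is. It only knows which word tends to follow which.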

For example, GPT-2 once tried naming mushrooms and came up with things like:

So, how did we get from that useless genius to the polished, helpful assistant that can write you an email, a poem, or a Python script?

Before RLHF, we must understand RL

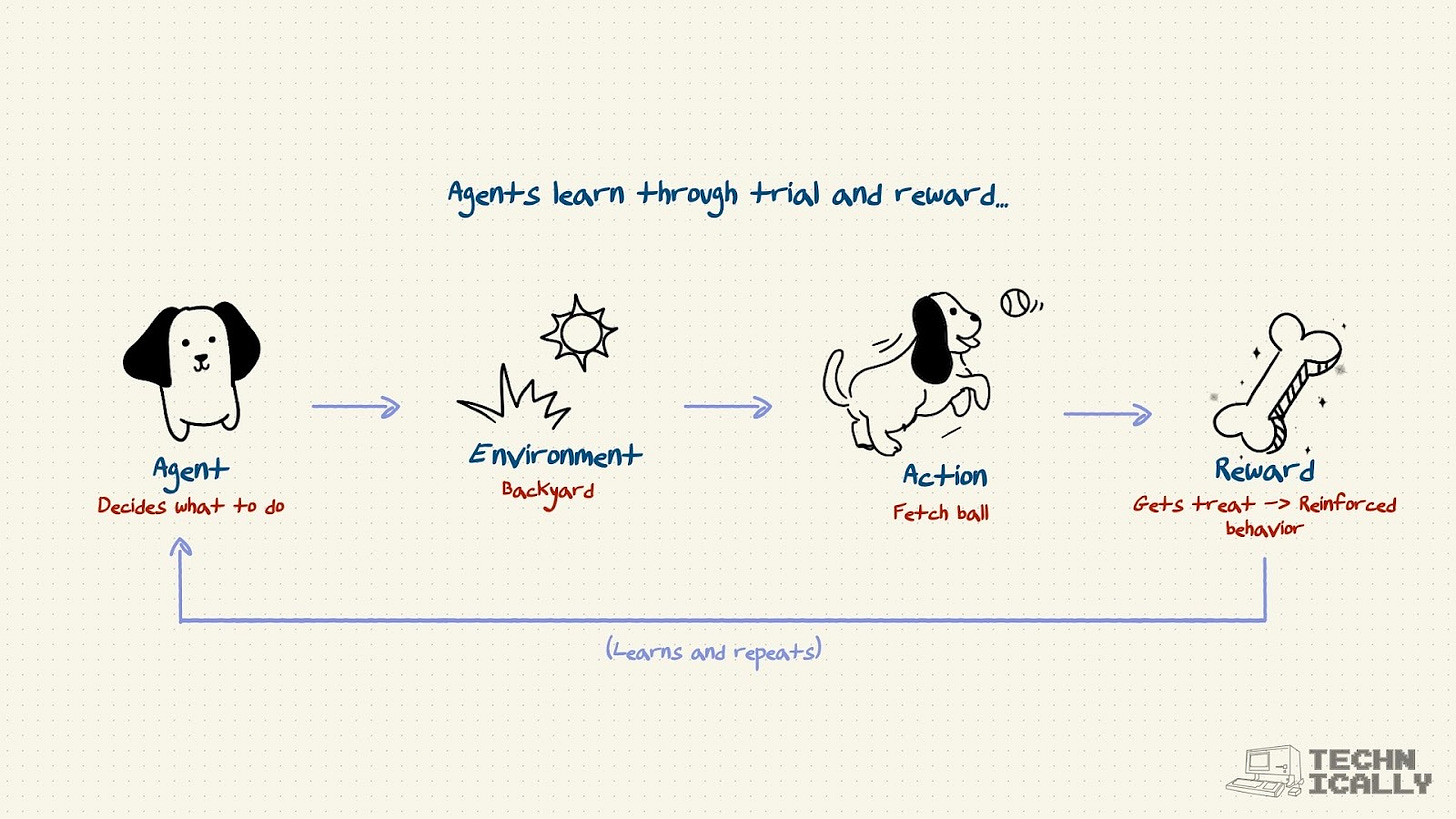

Before we add the “human feedback” part, let’s talk about Reinforcement Learning (RL) on its own. It’s a field of AI that’s all about teaching an “agent” (our AI model) to make good decisions to maximize a reward. And it has always been a bit of an odd stepsibling of the more traditional methods of ML.

The easiest way to understand this is to think about training a dog. Imagine you’re teaching your new puppy (not a Doodle, please god) to fetch.

The Agent: The AI... I mean, the dog.

The Environment: Your backyard.

The Action: Mr. Dog can do lots of things—run, bark, dig up your prize-winning cilantro, or chase the ball.

The Reward: When Mr. Dog successfully brings the ball back, you give him a treat and say “Good boy!” That’s a positive reward. When he eats your herbs, you say “No!” That’s a negative reward (or lack of a treat).

Your dog isn’t a genius. He doesn’t understand physics or the emotional significance of your cilantro. He just learns, through trial and error, that one sequence of actions (chase ball -> pick up ball -> bring to human) leads to a precious treat. Over time, he’ll do that more and the other stuff less.

That’s Reinforcement Learning in a nutshell. You don’t give the model explicit instructions. You just give it a goal (get the reward) and let it figure out the best strategy. It’s how AI has learned to master complex games like chess and Go, finding moves that no human had ever considered. It is also the basis for RLHF.
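The dog example can be sketched as a tiny trial-and-error loop. This is a simplified illustration, not how production RL systems are built: the reward numbers, the `train_dog` function, and the explore-sometimes strategy (often called epsilon-greedy) are all assumptions chosen to keep the sketch short.

```python
import random

# Hypothetical rewards: a treat for fetching, a "No!" for digging, nothing for barking.
REWARDS = {"bark": 0.0, "dig up cilantro": -1.0, "fetch ball": 1.0}


def train_dog(episodes=500, explore_rate=0.1, learning_rate=0.1, seed=0):
    """Return the dog's learned estimate of how rewarding each action is."""
    rng = random.Random(seed)
    actions = list(REWARDS)
    # The dog starts with no opinion about any action.
    value = {action: 0.0 for action in actions}

    for _ in range(episodes):
        if rng.random() < explore_rate:
            # Trial and error: occasionally try something at random.
            action = rng.choice(actions)
        else:
            # Otherwise do whatever has paid off best so far.
            action = max(actions, key=value.get)

        # The environment (you, in the backyard) responds with a reward.
        reward = REWARDS[action]

        # Nudge the estimate for this action toward the reward just received.
        value[action] += learning_rate * (reward - value[action])

    return value


values = train_dog()
best_action = max(values, key=values.get)
print(best_action)
for action, estimate in values.items():
    print(f"{action}: {estimate:.2f}")
```

Nobody tells the dog that fetching is the goal. In this toy setup the estimate for fetching should end up highest simply because it is the only action that ever earns a treat, so the dog does it more and the other stuff less.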
