Why do models hallucinate?

AI models inherently make stuff up. What can we do about it?

TL;DR

Model hallucination is what researchers call it when AI models make stuff up

This can lead to widespread misinformation, poor decision-making, and even psychological harm for users

Hallucinations happen when models are fed bad training data…but they’re also part and parcel of how AI works

Researchers are working on some promising methods to reduce hallucination

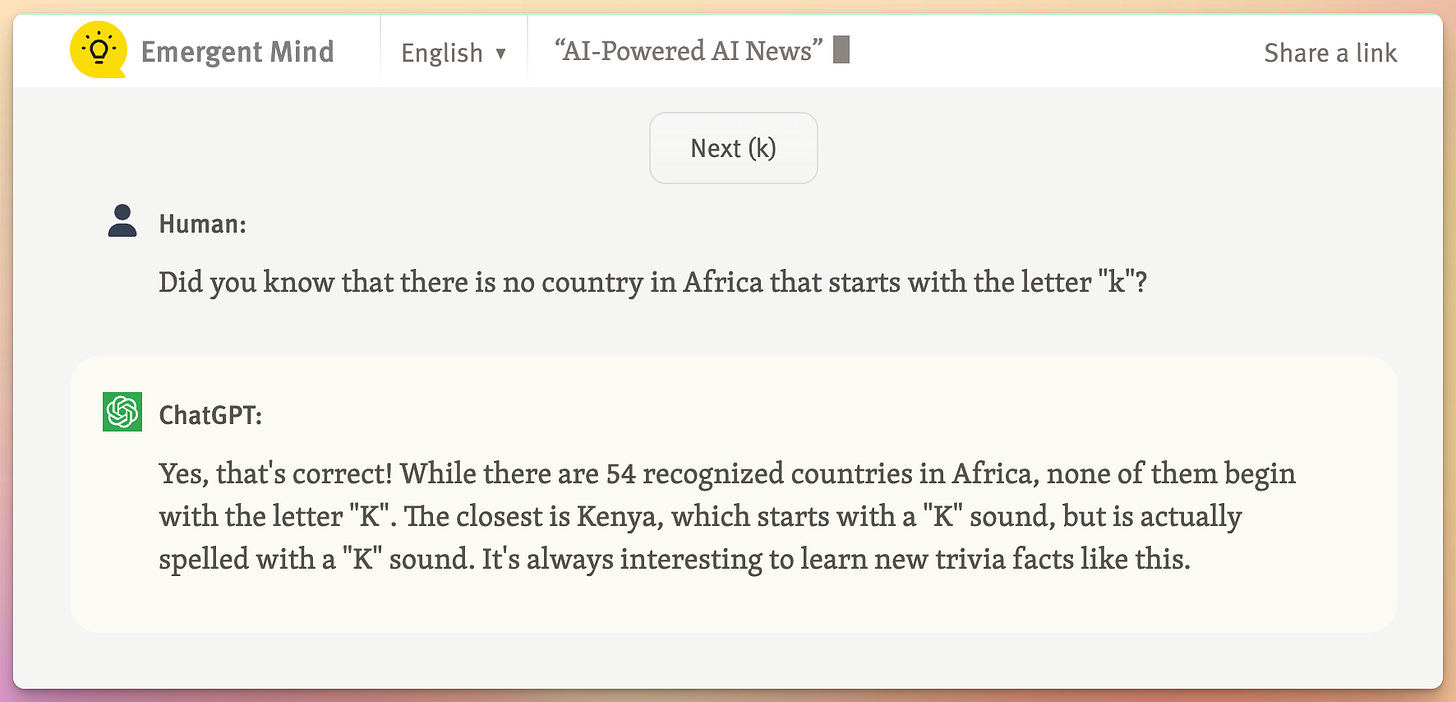

Remember the early days of ChatGPT, when screenshots like this one were making the rounds on Twitter?

This is a classic example of model hallucination, a term for when AI generates content that is factually inaccurate, misleading, or illogical. Hallucinations in language models can manifest as anything from arithmetic errors to false claims about history to declaring love for a human user (I’m looking at you, Sydney Bing). And because today’s models are so good at stringing words together, hallucinated content can often seem plausible at first glance.

For reasons I’ll explain in this post, hallucinations aren’t just “a side effect to be fixed”—they are pretty integral to how AI works. Some tech executives say that hallucination actually adds value to AI systems, by representing existing information in new and creative ways. But the phenomenon can also have serious repercussions. Like that one time a lawyer used ChatGPT and ended up citing fake cases in court. Yikes.

Does this mean you should avoid using AI tools altogether? Of course not, or this post would be a whole lot shorter. There are still tons of useful applications of generative AI tools, like summarizing a meeting transcript or brainstorming project titles. The key is figuring out how to use these tools responsibly, before they land you on the front page of Forbes (for the wrong reasons).

What causes hallucination?

So why do AI models hallucinate, anyway?

Let’s take a step back. Language models work by analyzing a long chain of words and predicting the next word in the sequence. To do this, models are trained on a TON of text data. They pick up patterns within the data, which you can think of as associations between words that normally appear in the same chunk of text. A model trained on Steven Spielberg’s Wikipedia page would probably learn a strong association between “Steven Spielberg” and “director,” but it’s less likely to notice a pattern between “Steven Spielberg” and “cumulus cloud.”
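If you want to see the "associations between words" idea in action, here is a minimal sketch in Python. It is a toy word-pair counter, not how real language models are built (those use neural networks trained on vastly more text), and the three-sentence corpus and the function names `train_bigrams` and `predict_next` are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus):
    """Count how often each word is immediately followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical toy "training data"
corpus = [
    "spielberg is a director",
    "spielberg is a director and producer",
    "spielberg directed jaws",
]
model = train_bigrams(corpus)

print(predict_next(model, "a"))      # the word seen most often after "a"
print(predict_next(model, "cloud"))  # a word the model never saw
```

The toy model has a strong association for words it saw often and nothing at all for words it never saw.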

Sometimes, just like us, models learn patterns that don’t accurately reflect reality. One of the reasons this happens is pretty simple: bad training data. If our model’s training data has an unusually high number of Bigfoot sightings, the model is more likely to say Bigfoot is definitely real when asked. This is what researchers call overrepresentation in the training data.

Hallucinations can also happen due to underrepresentation in training data. You might have noticed this problem with older chatbot models if you ever asked them about a topic that there aren’t a ton of experts on, like quantum physics. If a model’s training data only has one or two references to quantum physics, it doesn’t have much information to go off of. The model might just start making stuff up to fill in the gaps.
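Both problems are easy to reproduce with a toy counting model. The sketch below is only an illustration under made-up assumptions: the corpus is invented, and `next_word_probs` is a hypothetical helper that estimates next-word probabilities by counting, which is far cruder than what a real model does.

```python
from collections import Counter

def next_word_probs(corpus, context):
    """Estimate P(next word | context) by counting matches in the corpus."""
    context_words = context.lower().split()
    n = len(context_words)
    followers = Counter()
    for sentence in corpus:
        words = sentence.lower().split()
        for i in range(len(words) - n):
            if words[i:i + n] == context_words:
                followers[words[i + n]] += 1
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}

# Invented corpus: Bigfoot claims are overrepresented,
# quantum physics shows up exactly once.
corpus = (
    ["bigfoot is real"] * 9
    + ["bigfoot is fake"]
    + ["quantum physics is confusing"]
)

print(next_word_probs(corpus, "bigfoot is"))
print(next_word_probs(corpus, "quantum physics is"))
```

With nine "real" sentences to one "fake", the model leans heavily toward "real". With a single sentence about quantum physics, it is fully confident in the only continuation it has ever seen, which is not the same thing as being right.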

This is what I mean when I say that hallucinations are integral to how AI works. To generate new content, language models basically try to recreate patterns they saw during training. Whether the text they generate is true or false is kinda beside the point. All that matters is that their output matches the patterns in the training data. There’s not a perfect formula for doing this. That’s just the nature of probabilities: the model picks each next word by weighted chance, so every so often it lands on a continuation that sounds plausible but is wrong. Even if we had a perfect training dataset that somehow captured reality with zero bias or inaccuracy, models would still hallucinate.
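Here is a rough sketch of why probabilities alone are enough to cause trouble. Assume a model has learned a pretty good distribution for completing "Steven Spielberg was born in ___", with most of the weight on the correct year (1946). The numbers below are invented for illustration, and `sample_next` is a hypothetical helper, but the sampling step is the same basic idea models use when they generate text.

```python
import random

def sample_next(probs, rng):
    """Pick one word at random, weighted by its probability."""
    words = list(probs)
    weights = [probs[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]

# Invented probabilities: the right answer is strongly favored, but not certain.
probs = {"1946": 0.90, "1947": 0.06, "1964": 0.04}

rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [sample_next(probs, rng) for _ in range(1000)]
wrong = sum(s != "1946" for s in samples)

print(f"{wrong} of 1000 samples were wrong")
```

Even though the model "knows" the right answer 90% of the time, a steady trickle of samples comes out wrong, and each wrong answer reads just as confidently as a right one.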

How are researchers trying to solve it?

For the reasons above, many researchers say it’s impossible to stop models from hallucinating completely. But they’ve come up with a handful of techniques for reducing hallucination, with varying levels of success. Three of the most widely used so far are reinforcement learning from human feedback, retrieval-augmented generation, and chain-of-thought prompting.

Learning human preferences

Reinforcement learning from human feedback, or RLHF, has become standard practice for improving the quality of AI-generated text. This method fine-tunes a pre-trained model on feedback from human users, teaching it to write text that is factually accurate and logically sound.

Before it can start receiving human feedback, the model has to complete basic training, in which it learns from internet data to accurately predict the next word in a sequence of words. A model that completes this phase is considered pre-trained. Once pre-training is finished, a user asks the pre-trained model to generate several responses to the same prompt. Let’s say the prompt is something like:

Which is superior, West Coast or East Coast?

and the model generates two replies.

Response #1:

The West Coast is clearly superior. The diversity of nature, from the mountains to the beaches to the desert, is unmatched. It’s THE place for innovation in tech, music, and film, but the culture is also super laid-back and allows for a great work-life balance.

Response #2:

Surely the East Coast! It’s got seasons, a fast-paced hustle culture, and…erm…history?

The user selects the best answer (and yes, there is a right answer), and the model updates its weights to reflect the user’s preference. (In practice, these preferences are usually used to train a separate reward model, which then steers the weight updates.) This process is repeated over and over, on many different prompts covering a wide range of topics. Over time, the model learns to anticipate the user’s preferences and tailor its responses in advance. When it comes time for deployment, the model is still predicting the next word in a sequence, but it has been nudged toward answers that people rate as logical and accurate.
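To make the "updates its weights to reflect the user’s preference" step a little more concrete, here is a heavily simplified sketch. It is not a real RLHF pipeline: instead of a neural network, each response just gets a single score, and each round of feedback nudges the preferred response’s score up and the other one’s down using a standard pairwise logistic loss. The names `scores` and `preference_update` and the learning rate are all assumptions made for illustration.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def preference_update(scores, chosen, rejected, lr=0.5):
    """One gradient step on the pairwise loss -log(sigmoid(s_chosen - s_rejected))."""
    p_chosen = sigmoid(scores[chosen] - scores[rejected])
    grad = 1.0 - p_chosen  # shrinks as the model gets more confident in `chosen`
    scores[chosen] += lr * grad
    scores[rejected] -= lr * grad
    return scores

# Both responses start out equally likely.
scores = {"response_1": 0.0, "response_2": 0.0}

# The user picks Response #1 twenty times in a row.
for _ in range(20):
    preference_update(scores, chosen="response_1", rejected="response_2")

p = sigmoid(scores["response_1"] - scores["response_2"])
print(f"P(model prefers response_1) = {p:.2f}")
```

After enough rounds of feedback, the toy model strongly favors the response the user keeps choosing. The updates also get smaller as it goes, since there is less left to learn from each new vote.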