AI and neuroscience

AI models seem to approximate the brain, intentionally or otherwise

Like many of you, I’ve been watching (listening to?) a lot of the Dwarkesh podcast over the past 6 months, and one theme that comes up a lot is the relationship between AI and the brain. The way we train and use GenAI models today resembles, at least loosely, how the pathways in the human brain work, and many neuroscientists and AI researchers believe the key to unlocking real superintelligence lies in better understanding and exploiting that connection.

This post is going to explore a few ways in which this is true and explain some of these rather complicated ideas in simpler language.

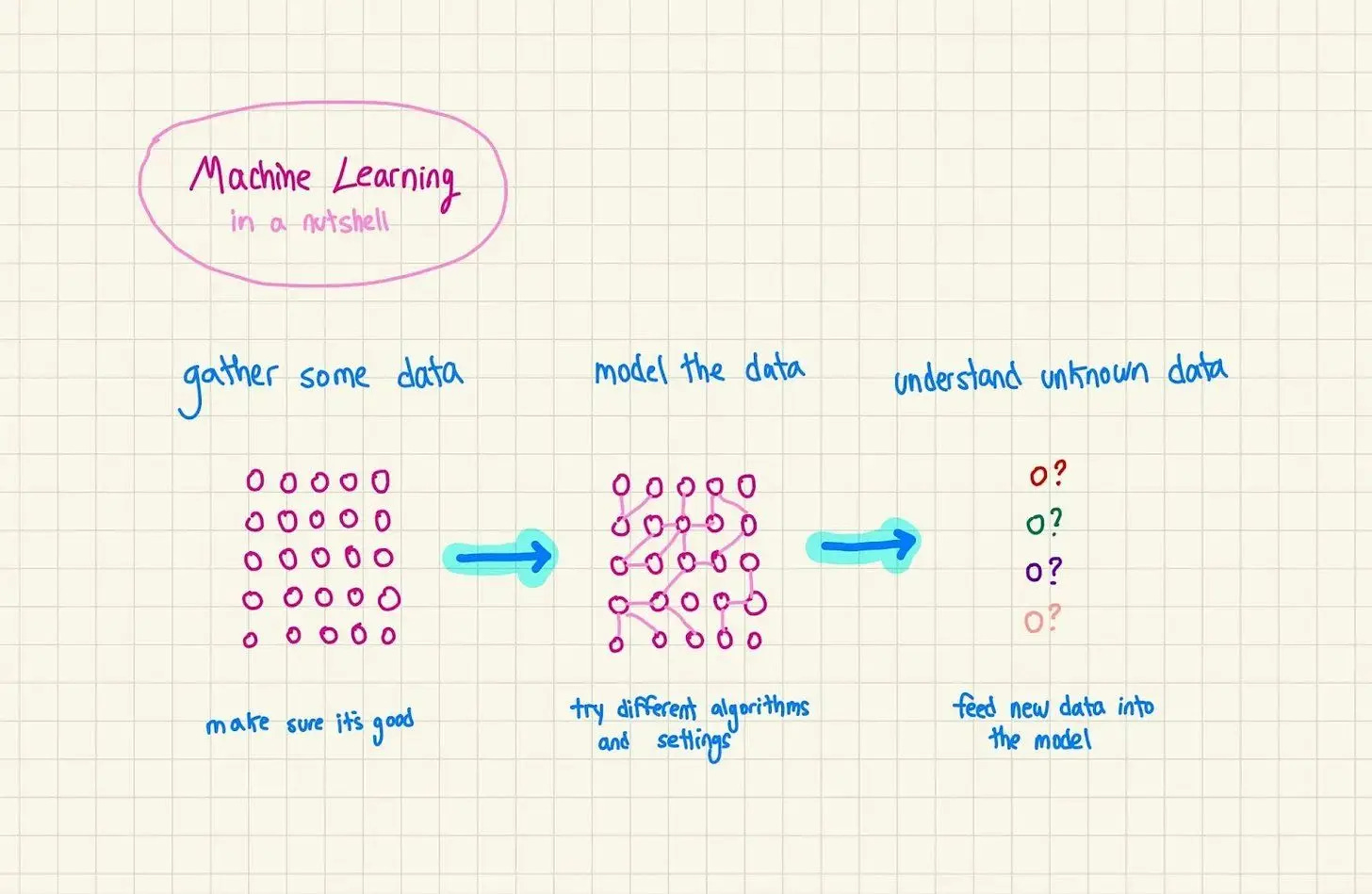

Neural networks, the basis for everything

The obvious place to start is neural networks, the architecture behind pretty much all of the AI models you use today, such as the GPT family, Claude, and Nano Banana. Obviously the first word – neural – likens these models to the animal brain. The human brain has roughly 86B neurons: specialized cells that transmit nerve impulses, essentially the core unit of how our brains carry information and signals. The idea is that neural networks work in kind of the same way.

And indeed, you’d be hard pressed to find an explanation of neural networks that doesn’t make an analogy to the human brain. Take, for example, Technically’s very own breakdown of neurons from the prolific Nicole Errera:

Neurons are the basic building blocks of AI architectures, modeled after the actual biological neurons that transmit signals throughout the human brain. Remember, AI models are essentially pattern investigators; they find the underlying pattern in the data. You can think of these neurons as the mathematical functions that are doing this hard investigative work, getting into the weeds of the data and figuring out what’s going on.

The math performed by individual neurons is actually pretty simple – it’s usually just basic multiplication and addition that you could do with a calculator. So how are AI models able to capture such complex patterns, like the ones involved in language and vision? The trick is to string together a lot of neurons – like hundreds of millions of them.
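To make the "multiplication and addition" point concrete, here is a minimal sketch of a single artificial neuron and a tiny layer built from a few of them. The numbers are made up for illustration, and the ReLU activation (clipping negative totals to zero) is my assumption; it is one common choice, not something the quote above specifies.

```python
def neuron(inputs, weights, bias):
    """One artificial neuron: multiply, add, then apply an activation."""
    # Multiply each input by its weight, add the products together, add a bias.
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # ReLU activation (an assumed choice): negative totals become zero.
    return max(0.0, total)


def layer(inputs, weight_rows, biases):
    """A layer is several neurons all looking at the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Hypothetical inputs and weights, chosen only to keep the arithmetic easy.
inputs = [1.0, 2.0]
hidden = layer(inputs, [[0.5, -1.0], [1.0, 1.0]], [0.0, -1.0])
output = neuron(hidden, [2.0, 0.5], 0.5)

print(hidden, output)  # [0.0, 2.0] 1.5
```

Each neuron really is calculator-level math. Real models get their power from stacking far more of these units and from learning the weights from data rather than picking them by hand.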

So in practice, the neural net in a model like GPT-5 does, at least loosely, resemble how a mammalian brain works. This is no accident. If you trace the history of the neural network you’ll end up back in 1943 (when I was born), when Warren McCulloch (neurophysiologist) and Walter Pitts (mathematician) wrote a paper proposing a mechanism for how neurons might actually work.

To illustrate their hypothesis, they modeled a simple neural net using electrical circuits. Further attempts culminated in a breakthrough at Stanford in 1959, when MADALINE (it’s an acronym) became the first neural network applied to a real-world problem: eliminating echoes on phone lines. So in short, the fact that neural networks (roughly) approximate how the brain works is not an accident; that insight goes back to their very origin.

Now, any neuroscientist worth their salt will tell you that there’s more we don’t know about the brain than we do know. The true inner workings of this organ are still largely a mystery. And so it would be naive to argue that neural networks work in the same way that the brain works. But it’s safe to say that they’re inspired, at least loosely, by what we do know about how the brain works.