How Vercel became the f̶r̶o̶n̶t̶e̶n̶d̶ AI cloud

And why building AI products requires new forms of infrastructure for developers.

This Thursday (10/23) is Vercel’s Ship AI conference, and I thought there’d be no better way to mark it than to give you something unprecedented for Technically: a three (yes, 3) post week.

We’ve got three new pieces coming your way about the Vercel cinematic universe and why it’s so important for developers:

Today: How building AI apps requires different infrastructure than web apps

Tomorrow: The vibe coder’s guide to real coding — all the things you need to know to turn your vibe coded app into a reliable product

Thursday: How to build AI products that actually work (all about evals)

Enjoy!

—

In May 2023, I wrote a Technically post about one of my favorite software products, Vercel. At the time, they had one focus, and they did it really well: making it easy for engineers to deploy their frontends, the user-facing parts of their applications. In fact, the award-winning¹ Technically website runs on Vercel.

But since then, a lot has changed. The world has been taken over by AI (figuratively, if anyone from the future is reading this). The way people build apps is drastically different from how it was just a few short years ago. To keep up, Vercel has built a bunch of really interesting new products that are gaining traction fast. This post covers those products, what makes them interesting, and why you should care about them. Because I do!

How building AI apps is different from building app apps

If you had asked a developer 5 years ago what it meant to “build AI into your app,” they’d probably have looked at you funny. At the cutting edge, some teams were using what we then called Machine Learning to personalize product experiences or offer basic data-driven suggestions. But all in all, it wasn’t top of mind for most people.

Today everyone is thinking about how to build AI into their apps. Models have gotten so good that they can legitimately power brand-new product experiences across verticals like legal, healthcare, you name it. In fact, I’d challenge you to find a developer today who isn’t experimenting with LLMs in their apps. But what does it really mean to build AI into your app?

In English, it means using an AI model to do something in your app: summarizing text, generating a SQL query, or processing a document, among other things. On the surface this might seem simple…just prompt the model, right? Not so fast, cowboy. Developers are running into a few hurdles where the tools that got us here won’t take us there.

The logistics of using AI models

Let’s start with the models themselves. Meaningfully building AI into your product experience isn’t as simple as pasting in an API key (ignore what you read on X). Here are some questions you have to contend with:

What model do you use? OpenAI, Anthropic, or maybe something open source you host yourself? What if you want to use multiple?

How do you handle things going wrong? What if a model takes too long to respond? What if the response is bad?

How do you pass relevant data from your application to the model to make responses customized?

How do you change out models quickly and cleanly when you want to try a new one?

Easier said than done.
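To make a couple of those questions concrete (using more than one model, and recovering when one fails), here’s a minimal sketch in plain TypeScript, not any particular SDK. It hides every model behind one shared shape and falls back to a backup when the first choice errors out. The `TextModel` type, the `generateWithFallback` helper, and the two fake providers are all hypothetical stand-ins for real API clients.

```typescript
// Hypothetical shape: every provider is wrapped behind the same function
// signature, so the rest of the app never cares which one it is talking to.
type TextModel = {
  name: string;
  generate: (prompt: string) => Promise<string>;
};

// Try each model in order. If one throws (outage, rate limit) or returns an
// empty answer, move on to the next. Returns the first successful result.
async function generateWithFallback(
  models: TextModel[],
  prompt: string,
): Promise<{ model: string; text: string }> {
  const errors: string[] = [];
  for (const model of models) {
    try {
      const text = await model.generate(prompt);
      // Treat an empty answer as a "bad response" and keep going.
      if (text.trim().length === 0) throw new Error("empty response");
      return { model: model.name, text };
    } catch (err) {
      errors.push(`${model.name}: ${(err as Error).message}`);
    }
  }
  throw new Error(`All models failed: ${errors.join("; ")}`);
}

// Fake providers standing in for real API clients (hypothetical).
const flakyModel: TextModel = {
  name: "provider-a",
  generate: async () => {
    throw new Error("503 Service Unavailable");
  },
};

const backupModel: TextModel = {
  name: "provider-b",
  generate: async (prompt) => `Echo: ${prompt}`,
};
```

Calling `generateWithFallback([flakyModel, backupModel], "Hi")` skips the failing provider and resolves to `{ model: "provider-b", text: "Echo: Hi" }`. Trying a new model then means adding it to the array rather than rewriting every call site.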

Running untrusted code

A ton of the promise of AI models lies in their ability to write code for us. You might prompt a model to build a new feature for you, and it generates 1,000 lines of code to build it. Are you going to read through and understand every single line? If you answered “yes,” why are you lying?

Developers use the term untrusted code to refer to code that they, well, don’t trust: code that hasn’t been manually reviewed and verified to be free of vulnerabilities and security risks. Generally, running untrusted code is a big no-no, because attackers can slip in trap doors that steal passwords, break applications, or even shut down servers.

When it comes to AI, essentially all generated code is untrusted. How (and where) do you run it without putting your computer, servers, or infrastructure at risk?

Streaming, not request / response

If you’ve used ChatGPT or Claude, you’ve probably noticed that the models have what one might call a stream of consciousness. They generate their responses bit by bit and, in restaurant terms, bring the food out as it’s ready. This is because under the hood, LLMs are essentially predicting one word (technically, one token) at a time. For longer responses, the whole thing can take minutes.

Cool, except for one problem: this is not at all how the pipes of the internet are built. The web as we know it, and all of the frameworks developers use to build the apps you know and love, are built on the request / response model. You ask an API for some data, and it gives you back that data…all at once. It either works or it doesn’t.
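Here’s a toy illustration of the difference, assuming a hypothetical `fakeModelStream` that yields an answer word by word the way an LLM does. `requestResponse` waits for the whole thing before returning anything, while `streamToUser` hands each chunk to a callback (say, your UI) the moment it exists. None of these names come from a real library.

```typescript
// Hypothetical stand-in for a model: produces its answer one piece at a time,
// pausing between pieces to mimic generation time.
async function* fakeModelStream(answer: string, delayMs = 10): AsyncGenerator<string> {
  for (const word of answer.split(" ")) {
    await new Promise((resolve) => setTimeout(resolve, delayMs));
    yield word + " ";
  }
}

// Request/response style: collect everything, then return it all at once.
async function requestResponse(answer: string): Promise<string> {
  let full = "";
  for await (const chunk of fakeModelStream(answer)) {
    full += chunk;
  }
  return full.trim();
}

// Streaming style: pass each chunk along as soon as it is generated.
async function streamToUser(answer: string, onChunk: (chunk: string) => void): Promise<void> {
  for await (const chunk of fakeModelStream(answer)) {
    onChunk(chunk);
  }
}
```

With a real model, the gap between those two functions is the difference between staring at a spinner for a minute and watching the answer appear as it’s written.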

And another thing: the request / response model isn’t very patient. APIs are supposed to give you answers in a matter of seconds, not minutes. Many web frameworks and hosting setups enforce a maximum timeout (the number of seconds they’ll wait before giving up), and it’s often a minute or less; even AWS Lambda, a popular serverless platform, stops a function after 15 minutes at most. Meanwhile, AI models can take several minutes to get you what you want…and while you’re waiting on them, you’re still paying for your infrastructure.
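One common way code copes with those limits is to race the work against a deadline. This is a minimal sketch with hypothetical `withTimeout` and `slowModel` helpers, using milliseconds to stand in for the seconds or minutes a real platform would allow:

```typescript
// Race a slow operation against a deadline, the way a platform with a
// maximum request duration cuts off work that runs too long.
function withTimeout<T>(work: Promise<T>, limitMs: number): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const deadline = new Promise<never>((_, reject) => {
    timer = setTimeout(() => reject(new Error(`Timed out after ${limitMs} ms`)), limitMs);
  });
  // Whichever settles first wins; clear the timer either way so it can't fire later.
  return Promise.race([work, deadline]).finally(() => clearTimeout(timer));
}

// Hypothetical fake model call that takes `ms` milliseconds to answer.
const slowModel = (ms: number): Promise<string> =>
  new Promise((resolve) => setTimeout(() => resolve("done"), ms));
```

Note that `Promise.race` doesn’t cancel the loser: the slow call keeps running after the timeout fires. Real code would usually also abort the underlying request, for example with an `AbortController`.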

By the way, these last two problems, running untrusted code and long-running API calls, aren’t new. They’ve challenged developers for years outside of AI contexts. But they’re part and parcel of building AI apps today, so there’s what one might call a renewed focus on them.

The AI cloud, not just the frontend cloud

Long story short, the way we build apps is changing. Vercel started out as the best way to build the user-facing part of your app, which we wrote about a couple of years back. Since then, they’ve tackled this “AI app building problem” from a few angles.

The Vercel AI SDK

Aptly named, the AI SDK helps you build apps with AI. It has answers to all of the questions above…and more. It helps you:

Use tons of different models from different providers like OpenAI and Anthropic, but without having to change your code (much)

Do common model-related tasks like generating text or transcribing audio with a few simple lines of code

Add structured data from your application to model prompts, so responses are tailored to your users
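That last bullet sounds fancier than it is. Stripped of any SDK, “adding structured data to a prompt” mostly means serializing what your app already knows into the text you send the model. A minimal sketch, with a hypothetical `Customer` record and `buildSupportPrompt` helper (plain TypeScript, not an AI SDK API):

```typescript
// Hypothetical app data: a record your application already has.
interface Customer {
  name: string;
  plan: "free" | "pro";
  openTickets: number;
}

// Build a prompt that embeds the app's data as JSON, so the model can ground
// its answer in real facts about this user instead of guessing.
function buildSupportPrompt(customer: Customer, question: string): string {
  return [
    "You are a support assistant. Use only the customer data below.",
    "Customer data (JSON):",
    JSON.stringify(customer),
    `Question: ${question}`,
  ].join("\n");
}
```

For a customer like `{ name: "Ada", plan: "pro", openTickets: 2 }`, the prompt carries the line `{"name":"Ada","plan":"pro","openTickets":2}`, and the resulting string is what you’d pass as the `prompt` to a text-generation call.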

Here’s the thing about OpenAI and Anthropic. They are competing with each other. Their engineering teams are not getting together for barbecues and agreeing on how they’re going to build things. So if you want to use their models via API to build your app, you’re going to need to learn two of everything, Noah-style. Don’t do that. Use the AI SDK instead, and you can write code so simple, a (precocious) child could read it:

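```typescript
// Requires the `ai` and `@ai-sdk/openai` packages; the OpenAI provider
// reads your API key from the OPENAI_API_KEY environment variable.
import { openai } from '@ai-sdk/openai';
import { generateText } from 'ai';

// Ask a model for text. Switching providers mostly means changing the
// `model` line (and its import), not the rest of your code.
const { text } = await generateText({
  model: openai('gpt-4o'),
  prompt: 'Invent a new holiday and describe its traditions.',
});

console.log(text);
```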

Another thing the AI SDK does is make it easy to build chat interfaces. For better or worse, everyone seems to be standardizing on chat: talking to AI models to get them to do things. The AI SDK takes care of many of the hard, backend-y parts of that, like streaming data and keeping track of your conversation. You can assemble these building blocks into whatever kind of chat app you want, like adding a helpful assistant to your Excel-killer startup (raising money now).
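“Keeping track of your conversation” is less magical than it sounds: chat model APIs are typically stateless, so each request resends the history. Here’s a hypothetical `Conversation` class (plain TypeScript, not the AI SDK’s own types) that stores messages and trims older ones so long chats stay within the model’s context window:

```typescript
// Hypothetical message shape, loosely modeled on common chat APIs.
type Role = "system" | "user" | "assistant";
interface ChatMessage {
  role: Role;
  content: string;
}

class Conversation {
  private messages: ChatMessage[];
  private maxRecent: number;

  constructor(systemPrompt: string, maxRecent = 10) {
    // The system prompt always stays at the front of the history.
    this.messages = [{ role: "system", content: systemPrompt }];
    this.maxRecent = maxRecent;
  }

  // Record a new turn from the user or the assistant.
  add(role: "user" | "assistant", content: string): void {
    this.messages.push({ role, content });
  }

  // What you'd send with the next request: the system prompt plus only the
  // most recent messages, so long chats don't overflow the context window.
  forModel(): ChatMessage[] {
    const [system, ...rest] = this.messages;
    const recent = this.maxRecent > 0 ? rest.slice(-this.maxRecent) : [];
    return [system, ...recent];
  }
}
```

Trimming by message count is the simplest policy; real apps often trim by token count or summarize older turns instead.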

The Vercel AI Gateway

I talked earlier about how difficult it can be to switch out the particular AI model your application is using. And if you’re one of those people who absolutely cannot get enough of the latest models (you’re the Simon Willison of your team), your desire to experiment is probably costing you a ton of engineering time. The AI SDK makes it easy to switch out models, but the AI Gateway makes it almost no sweat at all. Think of it as a layer between your app and the AI model providers that acts as a bouncer of sorts. It makes it easy to switch models, set spend limits so you don’t go broke, and distribute traffic across multiple providers at once: say, some users get an Anthropic model and others get an OpenAI one.
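To picture what that bouncer does, here’s a toy gateway in TypeScript. It’s a hypothetical sketch of two of the ideas named above (weighted traffic splitting and a spend limit), not Vercel’s AI Gateway API; the `ToyGateway` class, provider labels, and per-call costs are made up for illustration.

```typescript
// A route to one provider: how much traffic it should get, and what a call costs.
interface Route {
  provider: string;
  weight: number;
  costPerCallCents: number;
}

class ToyGateway {
  private routes: Route[];
  private budgetCents: number;
  private spentCents = 0;

  constructor(routes: Route[], budgetCents: number) {
    this.routes = routes;
    this.budgetCents = budgetCents;
  }

  // Pick a provider in proportion to its weight (e.g. 70% / 30% of traffic).
  // `rand` is injectable so the choice can be tested deterministically.
  pick(rand: number = Math.random()): Route {
    const total = this.routes.reduce((sum, r) => sum + r.weight, 0);
    let threshold = rand * total;
    for (const route of this.routes) {
      threshold -= route.weight;
      if (threshold < 0) return route;
    }
    return this.routes[this.routes.length - 1];
  }

  // Refuse any call that would push total spending past the budget.
  charge(route: Route): void {
    if (this.spentCents + route.costPerCallCents > this.budgetCents) {
      throw new Error(`Spend limit of ${this.budgetCents} cents reached`);
    }
    this.spentCents += route.costPerCallCents;
  }
}
```

With a 70/30 split, `pick(0.5)` lands on the first route and `pick(0.8)` on the second; left to `Math.random()`, roughly 70% of calls go to the first provider. Costs are tracked in whole cents to avoid floating-point rounding.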

By the way, v0 – the easiest way to go from idea to app, which I wrote about a few months ago – is actually built on top of all of this stuff.

While we were at it…some backend stuff

While Vercel was building the AI SDK, and figuring out more generally how to make it easier to build AI into your app, they developed two more features that are useful beyond just AI. They’re the answers to those two nagging problems I mentioned earlier: running untrusted AI-generated code and streaming long-running AI model responses.

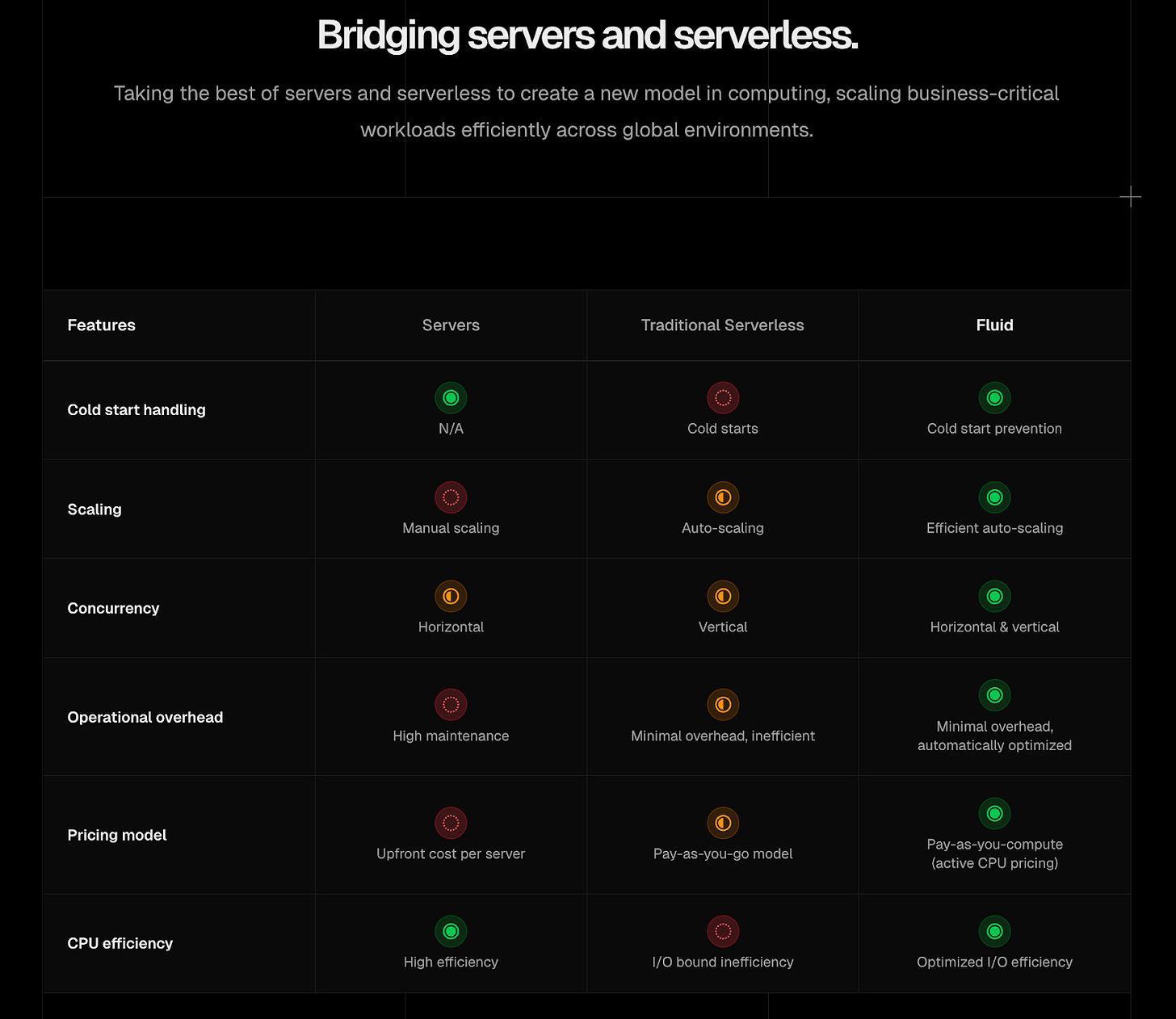

Vercel Fluid Compute for streaming model responses, among other things

Fluid Compute deserves its own dedicated post, since it’s a very interesting technology: essentially a hybrid between serverless (you manage no servers) and traditional servers (you manage some servers). It gives you all of the nice benefits of serverless, like no infrastructure to manage, but also some of the cost and performance benefits of old-school servers. It’s serverless-ful-less™.

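Vercel released Fluid Compute back in February of this year to a bit of fanfare and discussion. For the purposes of this post, though…one of the big upsides of Fluid Compute is how it helps you build AI apps: a single instance can handle many requests at once, so while one request waits on a slow model response, the same instance can get to work on others instead of sitting idle.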
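To see why letting one instance serve many requests helps with AI workloads, here’s a toy sketch of the general server-style idea. It is not Fluid Compute’s implementation; `callModel` and `serveConcurrently` are hypothetical helpers, and the fake model call spends nearly all its time waiting on the “network.” Because waiting doesn’t occupy the CPU, one process can keep several of those calls in flight at once:

```typescript
// Hypothetical fake model call: almost all waiting, very little CPU work.
const callModel = (id: number, ms: number): Promise<string> =>
  new Promise((resolve) => setTimeout(() => resolve(`answer ${id}`), ms));

// Serve several requests in one process at the same time. Each request is
// just awaiting I/O, so they overlap instead of queueing behind each other.
async function serveConcurrently(
  requestCount: number,
  ms: number,
): Promise<{ answers: string[]; elapsedMs: number }> {
  const start = Date.now();
  const answers = await Promise.all(
    Array.from({ length: requestCount }, (_, i) => callModel(i, ms)),
  );
  return { answers, elapsedMs: Date.now() - start };
}
```

Five 100 ms calls finish in roughly 100 ms total rather than 500 ms. A setup that dedicates one instance to each request would instead keep five instances busy doing nothing but waiting.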

Vercel Sandboxes for running untrusted code

The aptly named Sandbox allows you to run untrusted code (it could be the most evil code in the world) without worrying about it breaking things. It works through intense isolation: the code runs on a totally locked-down, solitary-confinement-style server (a tiny one) where it can’t do any real damage to nearby programs or servers.

The best place to build AI apps

So to sum it all up, Vercel has released a lot over the past two years to make building AI into your apps easier:

The AI SDK – makes it easier to use different models and build chat interfaces

The AI Gateway – helps you switch between and combine multiple AI models quickly

Fluid Compute – a server/serverless hybrid that makes infrastructure more efficient for AI and lets you handle long-running AI model requests

Sandboxes – allow you to run untrusted AI-generated code without breaking your app

You can use all of this stuff for free [etc. etc.]

¹ Not award-winning.